Research Article - Journal of Psychology and Cognition (2019) Volume 4, Issue 1

Applying predictive processing and functional redeployment in understanding realism in virtual environments.

Shulan Lu*, Derek Harter, Gang Wu and Pratyush Kotturu

Department of Psychology, Counseling, and Special Education, Texas A&M University – Commerce, TX, USA

- *Corresponding Author:

- Shulan Lu

Department of Psychology, Counseling, and Special Education, Texas A&M University - Commerce, Commerce, Texas, 75429-3011, United States

Tel: +903-468-8628

E-mail: shulan.lu@tamuc.edu

Accepted on January 23rd 2018

Citation: Lu S, Harter D, Wu G, et al. Applying predictive processing and functional redeployment in understanding realism in virtual environments. J Psychol Cognition 2018;4(1):1-5.

DOI: 10.35841/psychology-cognition.4.1.1-5

Abstract

Research has consistently demonstrated that people treat digital technology-based environments such as virtual reality (VR) as if they were real. This is consistent with neural reuse and predictive processing theories: neural circuits that developed to perform real world actions are reused when performing tasks in computer mediated environments. The current research investigates some of the factors that could help users leverage their existing real world representations. A reasonable hypothesis is that users are more likely to emulate existing real world processing if technological artifacts are congruent with their experiential basis. This work investigates the role of perceived task-risk cues, movement realism, and controller realism in performing actions. Controller design was manipulated (open-hand gesturing, wand, or prop knife) while participants cut a vegetable in a simulated environment. Participants evoked real world sensory motor contingencies when technological artifacts were congruent with their experiential basis.

Keywords

Embodied cognition; Risk perception; Computer mediated learning; Danger avoidance.

Introduction

Increasingly, technological systems have begun to develop new interaction styles that leverage the richness of humans' real world interactions. For example, low-cost full body motion tracking systems such as Kinect have become available, and eye-gaze-based interactive systems such as LC Technologies' Eyegaze Edge tracking system have made substantial advances. Because these systems depart from WIMP (windows, icons, menus, pointer) interfaces, a significant question arises: can gestural interactions, or in some cases intention-driven touchless interactions, evoke representations similar enough to perceiving and enacting actions in the real world to train responses and habits that can later be deployed in real world practice, and if so, how? If not, what differences might there be?

A myriad of theoretical approaches have been proposed to guide the design of systems that support users in embodying themselves in the environment and participating meaningfully in interactions. One of the central themes of this embodied interaction movement is to encourage alignment between the representations constructed for the digital world and the relevant experiential basis, so that digital artifacts recede into the background in the formation of representations instead of remaining in the foreground [1-6]. By judiciously re-representing key elements of physical reality and tapping into visual-perceptual cues, such digital-physical systems create a new interaction paradigm that leverages the proprioceptive abilities and motor skills we all develop and employ in the real world. This movement is consistent with insights from embodied and grounded cognitive science [7].

Recent views of embodied cognition are exploring the high-level neural mechanisms that may be critical to our embodied cognitive abilities. For example, it has been proposed that cognition is hierarchical predictive machinery, in which higher layers predict the activity of lower layers and the lower layers send feedback in the form of prediction error signals [8-10]. These predictive theories suggest that more abstract concepts and higher-level abilities, such as keeping track of goal states, are built up through the testing and refining of predictive mechanisms. The predictions and error signals are fundamentally bidirectional: higher levels generate predictions of the neural activity patterns of lower layers, and mismatches generate error signals that propagate back up the hierarchy, where they can be used to refine the predictive machinery.
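To make this bidirectional flow concrete, the sketch below is a toy, single-variable predictive-coding update of the kind found in introductory tutorials; it is an illustration, not a model used in this study. A higher level holds an estimate `mu` of the cause of a lower-level signal `x`, predicts that signal as `w * mu`, and adjusts `mu` using the prediction error sent back up, balanced against its own prior. The linear mapping, the variance-weighted (Gaussian) errors, and all names and parameter values are assumptions chosen for clarity.

```python
def infer_cause(x, w, prior, lr=0.1, steps=200, var_x=1.0, var_p=1.0):
    """Toy predictive coding: estimate a higher-level cause mu of input x by
    gradient descent on variance-weighted squared prediction errors."""
    mu = prior  # start from the top-down expectation
    for _ in range(steps):
        pred = w * mu                   # top-down prediction of the lower level
        err_x = (x - pred) / var_x      # bottom-up sensory prediction error
        err_p = (mu - prior) / var_p    # mismatch with the higher-level prior
        mu += lr * (w * err_x - err_p)  # errors passed upward refine the estimate
    return mu
```

With `x = 2`, `w = 1`, a prior of 0, and equal variances, the estimate settles halfway at 1.0; weakening the prior (a large `var_p`) lets the sensory evidence dominate and pulls the estimate toward `x / w = 2`.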

This view of the brain as an active predictive machine suggests that the sensory repertoire gathered from past experience and the current sensory/perceptual inputs jointly constrain the computation of the probabilities that underlie neural representations. Such predictive views of embodied cognition are especially relevant to understanding human performance in computer mediated environments. In a computer mediated environment, we interact with the digital environment using predictive machinery that evolved and developed to work with other (usually real world) experiences [11].

According to neural reuse theories, neural circuits are expected to be reused and redeployed so that perceptual predictions in computer mediated environments are as efficient and accurate as possible [12]. There are two common mechanisms of reuse, especially when learning to use a computer mediated environment for some task. In the first, new higher-level prediction abstractions are created to learn predictions of low-level circuitry that is being used essentially for its original purpose. For example, objects depicted in a virtual reality are designed to be visually similar to their real world counterparts, so the visual circuitry that interprets them is doing the job it was developed for. In the second, existing higher-level abstractions put low-level circuitry to a novel function to cope with the differences in an unfamiliar computer mediated experience. For example, we may perform a common task in a simulated environment, such as moving objects around to complete some goal, but the low-level motor actions needed to interact with the virtual world go through an input controller such as a joystick rather than our own hands.

In cognition, this predictive machinery results in a tight coupling between what is available in the environment (such as its fidelity) and the sensory motor contingencies that get triggered in a user. Central to the argument of the current work is the bidirectionality of this coupling. For example, users' movements can modify which aspects of the environment are attended to and reflexively tweak the run-time representations used to select the next action. However, previous research has been inconclusive regarding this prediction, and existing research paradigms are not conducive to understanding these bidirectional interactions as they unfold. Existing studies examined explicit game performance measures and players' subjective reports, including perceived mental workload, but did not examine real time processing measures [13,14]. In another study, participants viewed video clips of transitive actions and reported that habitual actions were easier and more natural to understand [15].

In this research, we examined real time processing measures while manipulating the controller a participant used to perform a simulated task in a simulated environment. We varied how congruous the controller was with the real world tool a person would use for the same task. Specifically, we set up a simple task of cutting objects with a knife and measured implicit task performance as users interacted with the simulated environment with an empty hand, while holding a wand, or while holding a prop knife. We tested the following hypotheses.

Physicality hypothesis

Because the knife and the wand in our study were matched in weight and length, both device-based controllers share physical properties that differ markedly from those of an open hand. Thus, implicit task performance in terms of cut location (i.e., the physical injury index) was expected to differ between the device-based controllers (knife or wand) and open-hand gesturing.

Risk perception hypothesis

Because the knife is the only controller that could trigger the perception of risk [16-19], we expected significant differences in total time on task between the knife condition and the non-knife conditions (wand and open hand).

Methods

The desktop virtual environments we developed for the experiments reported here aim to emulate a stationary work area, where the avatar puppets the motions of the user’s arm in the real world, to allow the user to manipulate objects through the avatar’s actions. A typical example we have implemented is a kitchen food preparation area, where the user has control of one arm of the avatar in the virtual space to manipulate knives, bowls, food and other objects. The user can have full control of the arm(s), and in more immersive versions can also control head gaze and direction.

We used the hands-free capability of the Kinect to test different conditions of physical embodiment in a vegetable cutting task, in which the user held a prop knife, held a wand, or used a tracked empty hand to control an avatar wielding a virtual knife in the virtual environment. The Kinect provides the position of the user's hand in real space, which is transmitted to the running Blender program as a set of three position coordinates. We developed a framework that gathers this positional information from the Kinect and transmits it to the running Blender simulation. The effector position was recorded in pixels every 10 ms.
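The paper does not describe the data format used between the Kinect framework and Blender. As a purely hypothetical sketch, each 10 ms sample could be serialized as a timestamp plus the three hand coordinates with Python's standard `struct` module (Python is Blender's scripting language), ready to be sent over a local socket. The byte layout, `SAMPLE_FORMAT`, and the function names are illustrative assumptions, not the authors' implementation.

```python
import struct

# Hypothetical layout: little-endian float64 timestamp (s) followed by
# float32 x, y, z hand coordinates; "<" means standard sizes, no padding.
SAMPLE_FORMAT = "<dfff"
SAMPLE_SIZE = struct.calcsize(SAMPLE_FORMAT)  # 8 + 3 * 4 = 20 bytes


def pack_sample(t: float, x: float, y: float, z: float) -> bytes:
    """Serialize one tracked hand position on the sending (Kinect) side."""
    return struct.pack(SAMPLE_FORMAT, t, x, y, z)


def unpack_sample(data: bytes) -> tuple:
    """Decode one sample on the receiving (e.g., Blender) side."""
    if len(data) != SAMPLE_SIZE:
        raise ValueError(f"expected {SAMPLE_SIZE} bytes, got {len(data)}")
    return struct.unpack(SAMPLE_FORMAT, data)
```

A fixed-size record keeps each sample self-delimiting, so the receiver can decode datagrams independently; float32 coordinates trade precision for size, which is an assumption a real system might revisit.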

Participants

Fifty-three undergraduate students were recruited from Texas A&M University – Commerce (mean age = 24 years); 57% were female and 43% were male. Data from 2 participants were excluded from the analyses reported below.

Experimental design

We used a between-subjects design with controller type (knife, wand, or open hand) as the factor. The weight and length of the wand were matched with those of the prop knife. Participants were randomly assigned to the experimental conditions.

Procedure

The monitor was positioned at a height such that the eyes and head of the avatar in the environment were aligned with the participant's eyes and head in the real world. The food preparation station was positioned just above the waist of a user of average height.

After participants were successfully calibrated with the Kinect, they completed a familiarization phase with it. For the experimental trial, participants were given the following instructions: (1) they would see a cucumber being cut; (2) a kitchen bell tone would signal their turn to make a cut; and (3) they should make the cut wherever they wished. In addition, an experimenter indicated the appropriate starting position to facilitate accurate Kinect tracking.

Results

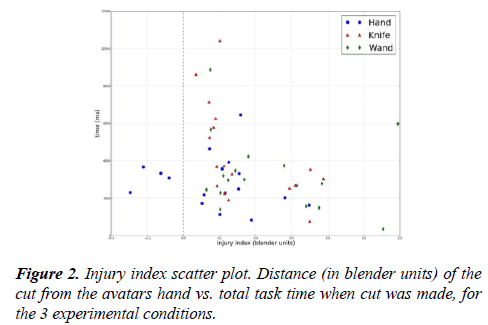

We computed the following measures: (a) the physical injury index, the distance in pixel coordinates from the participant's cut location to the fingertip of the avatar's left hand in the computer mediated environment; and (b) the total task time, from the onset of the kitchen bell tone indicating the participant's turn to the moment the participant had moved the virtual knife in a way that initiated a cut on the vegetable and the cutting action was completed. Figure 1 depicts the physical injury index (in Blender virtual environment pixel units). The measurement shown in the figure is the actual three-dimensional distance from the tip of the avatar's fingertip to the point on the cucumber where the cut began.
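The two measures can be sketched as follows, assuming 3D coordinates in Blender units and timestamps in seconds. `injury_index` follows the description above (a 3D distance). Because Figure 2 treats distances of 0.0 or less as potential injury, the authors' index appears to be signed; `signed_injury_index` is a hypothetical variant that projects onto an assumed axis pointing from the fingertip away from the hand, so it is not the authors' definition.

```python
import math
from typing import Sequence


def injury_index(cut_start: Sequence[float], fingertip: Sequence[float]) -> float:
    """Unsigned 3D Euclidean distance from the cut's starting point to the
    avatar's fingertip (both in the same virtual-environment units)."""
    return math.dist(cut_start, fingertip)


def signed_injury_index(cut_start, fingertip, away_axis) -> float:
    """Hypothetical signed variant: offset of the cut along an assumed axis
    pointing from the fingertip away from the hand. Values <= 0 mean the cut
    starts at or past the fingertip, i.e., into the finger."""
    norm = math.sqrt(sum(a * a for a in away_axis))
    if norm == 0:
        raise ValueError("away_axis must be non-zero")
    return sum((c - f) * a for c, f, a in zip(cut_start, fingertip, away_axis)) / norm


def total_task_time(bell_onset_s: float, cut_complete_s: float) -> float:
    """Seconds from the bell tone (participant's turn) to completion of the cut."""
    if cut_complete_s < bell_onset_s:
        raise ValueError("cut cannot complete before the bell")
    return cut_complete_s - bell_onset_s
```

For example, a cut starting 3 units along one axis and 4 along another from the fingertip has an unsigned index of 5.0, and a bell at 1.0 s followed by a completed cut at 3.5 s gives a total task time of 2.5 s.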

Figure 2 plots the injury index together with total task time for the 3 conditions (open hand, prop knife, and wand). Notice that, especially in the knife condition, participants took the longest to complete the cut when the cut they were attempting was closest to the avatar's left hand (which could result in injury at a distance of 0.0 or less from the hand). Interestingly, all participants who actually injured the hand, i.e., made cuts that went into the avatar's finger, were in the most incongruous condition, in which the user had an empty hand in reality but controlled an avatar wielding a knife in the virtual environment. In general, cuts that were more accurate and closer to the hand (without actually injuring it) took the most time to make.

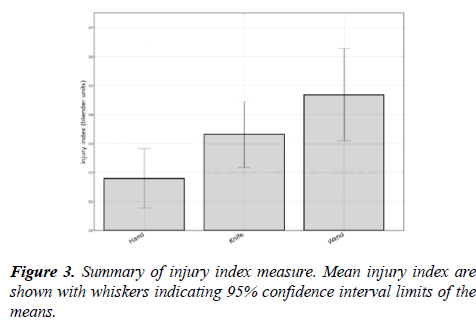

Figure 3 shows the mean injury index for each condition, with 95% confidence intervals. A planned contrast showed that participants cut significantly closer to the fingertip when gesturing with an empty hand than when holding a controller, t(48) = 2.61, p = 0.012, consistent with the physicality hypothesis. The open-hand condition also had the smallest mean injury index (i.e., cuts closest to the finger).
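A planned contrast of this kind can be sketched as below, assuming the standard one-way ANOVA formulation with the pooled within-group mean square as the error term. With 51 analyzed participants (53 recruited minus 2 excluded) in 3 groups, the error degrees of freedom are 51 - 3 = 48, matching the reported t(48). The authors' exact weights and software are not reported; weights such as (2, -1, -1) with the open-hand group listed first are one plausible coding.

```python
import math
import statistics
from typing import Sequence


def planned_contrast(groups: Sequence[Sequence[float]], weights: Sequence[float]):
    """Return (t, df) for a planned contrast across independent groups, using
    the pooled within-group variance (one-way ANOVA MSE) as the error term."""
    if len(groups) != len(weights):
        raise ValueError("one weight per group is required")
    if abs(sum(weights)) > 1e-12:
        raise ValueError("contrast weights must sum to zero")
    means = [statistics.fmean(g) for g in groups]
    # Pooled within-group sum of squares and its degrees of freedom (N - k).
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df = sum(len(g) for g in groups) - len(groups)
    mse = ss_within / df
    # Contrast estimate and its standard error.
    estimate = sum(w * m for w, m in zip(weights, means))
    se = math.sqrt(mse * sum(w * w / len(g) for w, g in zip(weights, groups)))
    return estimate / se, df
```

The sign of t depends only on which direction the weights are coded. The knife vs. non-knife contrast on total task time would use the same function with the knife group given weight 2 and the other two groups -1 each. If SciPy is available, a two-sided p value is `2 * scipy.stats.t.sf(abs(t), df)`.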

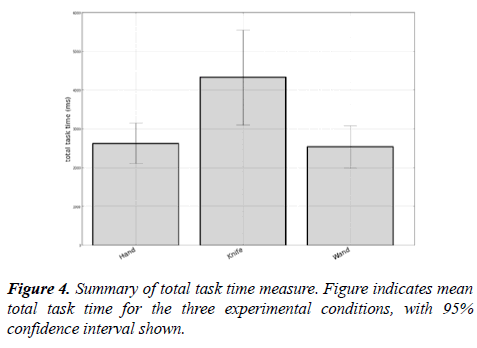

Figure 4 summarizes the total task time measure. A planned contrast showed that participants took significantly longer to cut with the knife than in the two non-knife conditions, t(48) = 2.06, p = 0.045, consistent with the risk perception hypothesis. Thus, while cutting with the knife was significantly slower than in the non-knife conditions, knife users were slightly more accurate than wand users, as shown in Figure 3. Open-hand users might appear the most accurate in terms of injury index and cut accuracy, but they were the only participants who actually cut into the avatar's hand.

Conclusion

The experiment showed that effector congruence in the simulated environment has significant effects on task performance. Participants were more likely to treat the task as they would in the real world when the controller they used was the most realistic and most in line with the existing neural circuitry typically employed to accomplish the task. For example, users were much less likely to injure their virtual hand when they were holding an object. We interpret this to mean that existing neural circuits and predictive machinery are more likely to be invoked in these more congruous conditions. Thus, caution and appropriate placement of the virtual knife in space were more likely to be achieved in order not to injure the avatar. The most cautious behavior, in terms of time on task, occurred when participants held a prop knife to manipulate the virtual environment. Participants using the knife took longer and made better cuts than those using a wand or holding no prop.

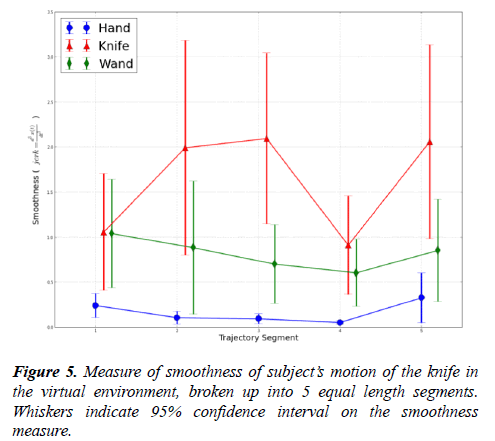

We also analyzed task planning and execution using motor coordination measures from this simulated task. For example, Figure 5 shows an analysis of the smoothness (or, conversely, the jerkiness) of the trajectories of the avatar's hand in the virtual environment, as controlled by the participant's hand movements through the Kinect. We divided each trajectory into 5 segments and used the third time derivative of position (jerk) [20] to measure trajectory smoothness. The whiskers represent 95% confidence intervals of the smoothness measure for each of the 5 segments. Knife performance differed significantly on this smoothness measure, especially in the middle part of the virtual knife's motion. These motion measures suggest that participants treated the task differently when using the more congruent controller.
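A segment-wise jerk measure can be sketched as follows, assuming positions sampled every 10 ms (as stated in the Methods) and jerk estimated by a third-order forward finite difference. Summarizing each segment by its mean squared jerk magnitude (in the spirit of the squared-jerk criterion in [20]) and splitting the series into 5 near-equal chunks by sample count are our assumptions; the paper does not specify its exact normalization.

```python
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def jerk_series(positions: Sequence[Point], dt: float = 0.01) -> List[Point]:
    """Third-order forward-difference estimate of jerk (d^3 x / dt^3) for a
    trajectory sampled every dt seconds (10 ms by default)."""
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    jerks = []
    for i in range(len(positions) - 3):
        p0, p1, p2, p3 = positions[i:i + 4]
        jerks.append(tuple(
            (p3[k] - 3 * p2[k] + 3 * p1[k] - p0[k]) / dt ** 3 for k in range(3)
        ))
    return jerks


def segment_mean_squared_jerk(positions, dt=0.01, n_segments=5) -> List[float]:
    """Split the jerk series into n_segments contiguous, near-equal chunks and
    return the mean squared jerk magnitude of each (lower = smoother)."""
    squared = [sum(c * c for c in j) for j in jerk_series(positions, dt)]
    if len(squared) < n_segments:
        raise ValueError("trajectory too short for the requested segments")
    bounds = [k * len(squared) // n_segments for k in range(n_segments + 1)]
    return [statistics.fmean(squared[bounds[k]:bounds[k + 1]])
            for k in range(n_segments)]
```

As a sanity check, a constant-acceleration (quadratic) path has essentially zero jerk in every segment, while a cubic path x = t³ has a constant jerk of 6, so every segment reports 36.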

Unlike previous studies, the current results speak to the importance of examining real time task planning and execution, and they showcase a paradigm for observing movement in developing embodied designs [21]. Equivalent performance on explicit measures does not necessarily mean there are no ongoing differences in users' minds. The paradigm developed in the current study has the potential to examine how changes in simulated environments influence subsequent user actions, and vice versa, as these bidirectional interactions unfold in real time [22]. Through systematic comparison, the current study gives insight into the critical ingredients of intuitive touchless interactions [23,24].

Predictive views of embodied cognition that take into account how neural circuits are likely to be reused when experiencing a simulated environment offer a rich conceptual framework for understanding how to improve immersion and learning outcomes when training in simulated environments. This framework could address several thorny issues: for example, why environments with minimal realism can still trigger the experience of immersion, and why environments with varying degrees of realism often are not rated differently in subjective reports of user experience. The less a system supports alignment with real world sensory motor contingencies, the more perceptual prediction errors will be generated along the way and the more hierarchical adjustments will be needed to compensate for them. This would lead to a greater probability of errors on the task and lower satisfaction, as reflected in subjective reports of user experience.

Acknowledgments

This work is supported by grants from the US National Science Foundation (IIS-0742109, 0916749). Any opinions, findings, and conclusions or recommendations expressed in this paper are those of the authors and do not necessarily reflect the views of the US National Science Foundation. We thank Sarah Wang and Paweena Kosito for assisting with various stages of this work, in particular data collection.

References

- Dourish P. Where the action is: The foundations of embodied interaction. MIT Press. 2001.

- Hornecker E. The Role of Physicality in Tangible and Embodied Interactions. Interactions. 2011;19-23.

- Ishii H. Tangible bits: beyond pixels. Proceedings of the 2nd international conference on Tangible and embedded interaction. ACM. 2008.

- Jacob RJ, Girouard A, Hirshfield LM, et al. Reality-based interaction: a framework for post-WIMP interfaces. Proceedings of the SIGCHI conference on Human factors in computing systems. 2008;201-210.

- Lu S, Harter D, Kosito P, et al. Developing low-cost training environments: How do effector and visual realism influence the perceptual grounding of actions? Journal of Cognitive Education and Psychology. 2014;3-18.

- Slater M. Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:3549-3557.

- Kirsh D. Embodied cognition and the magical future of interaction design. ACM Transactions on Computer-Human Interaction. 2013;20:1-30.

- Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences. 2013;36:181-204.

- Anderson M, Richardson M, Chemero A. Eroding the Boundaries of Cognition: Implications of Embodiment. Topics in Cognitive Science. 2012;4:717-730.

- Barrett L, Simmons W. Interoceptive predictions in the brain. Nature Reviews Neuroscience. 2015;16:419-429.

- Lu S, Harter D. Toward a cognitive processing theory of player's experience of computer mediated environments. Proceedings of CHI Play '16. Sheridan Printing, Austin, TX. 2016.

- Anderson ML. Neural reuse: A fundamental organizational principle of the brain. Behavioral and Brain Sciences. 2010;33:245-266.

- Freeman D, Hilliges O, Sellen A, et al. The role of physical controllers in motion video gaming. Proceedings of the Designing Interactive Systems Conference. 2012;701-710.

- Reinhardt D, Hurtienne J. The impact of tangible props on gaming performance and experience in gestural interaction. Stockholm, Sweden. 2018.

- Grandhi SA, Joue G, Mittelberg I. Understanding naturalness and intuitiveness in gesture production: insights for touchless gestural interfaces. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2011;821-824.

- Anelli F, Borghi A, Nicoletti R. Grasping the pain: Motor resonance with dangerous affordances. Consciousness and Cognition. 2012;21:1627-1639.

- Brogni A, Caldwell D, Slater M. Touching sharp virtual objects produces a haptic illusion. In R. Shumaker (Ed.), Lecture Notes in Computer Science: Virtual and Mixed Reality. Berlin Heidelberg: Springer-Verlag. 2011;234-242.

- Liu P, Cao R, Chen X, et al. Response inhibition or evaluation of danger? An event-related potential study regarding the origin of the motor interference effect from dangerous objects. Brain Research. 2017;1664:63-73.

- Zhao L. Separate Pathways for the Processing of Affordance of Neutral and Dangerous Object. Current Psychology. 2017;36:833-839.

- Hogan N. An organizing principle for a class of voluntary movements. The Journal of Neuroscience. 1984;4:2745-2754.

- Fdili Alaoui S, Schiphorst T, Cuykendall S, et al. Strategies for Embodied Design. Proceedings of the 2015 ACM SIGCHI Conference on Creativity and Cognition - C&C '15. ACM Press, New York, USA. 2015;121-130.

- Dawley L, Dede C. Situated Learning in Virtual Worlds and Immersive Simulations. In L. Dawley, & C. Dede, Handbook of Research on Educational Communications and Technology. Springer, New York, USA. 2014;723-734.

- Chattopadhyay D. Toward Motor. Proceedings of the 2015 International Conference on Interactive Tabletops and Surfaces - ITS '15. ACM Press, New York, USA. 2015;445-450.

- Gillies M. What is Movement Interaction in Virtual Reality for? Proceedings of the 3rd International Symposium on Movement and Computing - MOCO '16. ACM Press, New York, USA. 2016;1-4.