Research Article - Biomedical Research (2017) Volume 28, Issue 14

An efficient biometric feature extraction using CBIR

Betty Paulraj1*, Mohana Geetha D2 and Jeena Jacob I3

1Department of Computer Science and Engineering, Kumaraguru College of Technology, Coimbatore, Tamil Nadu, India

2Department of Electronics and Communication Engineering, SNS College of Technology, Coimbatore, Tamil Nadu, India

3Department of Computer Science and Engineering, SCAD College of Engineering and Technology, Tirunelveli, Tamil Nadu, India

*Corresponding Author: Betty Paulraj, Department of Computer Science and Engineering, Kumaraguru College of Technology, Tamil Nadu, India

Accepted on June 07, 2017

Abstract

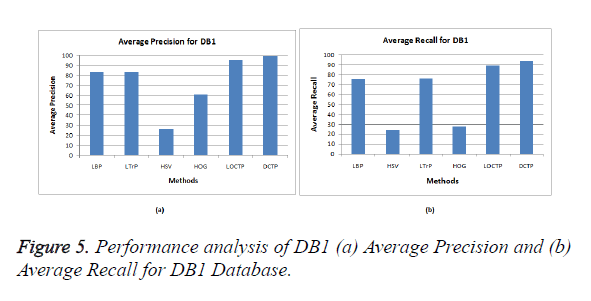

Face authentication is a considerable challenge in pattern recognition, since the face can undergo a large variety of changes in illumination, facial expression and aging. This paper proposes the Diverse-Chromatic Texture Pattern (DCTP), an effective feature extraction technique that aids face recognition through Content-Based Image Retrieval (CBIR). DCTP extracts the spatio-chromatic information of an image by generating three sequences of patterns from its inter-channel information. This produces three different successions of diversified chromatic feature vectors, which extract the unique information from each interactive plane (RGB, GBR and BRG) of the image. The information is extracted by forming different sequences of patterns according to the position of the mid-pixel. On the CASIA database (DB1), DCTP achieves an Average Precision/Average Recall of 99.67%/93.47%, a significant improvement over previous works such as Local Binary Pattern (LBP) (91.75%/75.18%), Local Tetra Pattern (LTrP) (91.64%/76%) and Local Oppugnant Color Texture Pattern (LOCTP) (99.21%/89.38%). On the Indian Face Database (DB2), DCTP improves the result from LBP (78.64%/57.35%), LTrP (79.84%/56.8%) and LOCTP (82.64%/58%) to 84.06%/58.7%.

Keywords

Diverse-chromatic texture pattern, Content-based image retrieval, Face recognition, Biometric authentication.

Introduction

Biometric authentication can be done effectively using Content-Based Image Retrieval (CBIR) techniques. Feature extraction plays a vital role in CBIR, and retrieval effectiveness depends on the methods adopted for extracting features from the test and trained images. These methods rely on features such as color [1-12], shape [12-24] and texture [25-50]. Color-based works are numerous, including the Conventional Color Histogram (CCH) [1] such as the HSV color histogram [2,3], the Fuzzy Color Histogram (FCH) [4], the Color Correlogram (CC) [5-7], color-shape-based features [9] and color-texture-based features [26-28]. More comprehensive surveys of color-based CBIR are presented in [6,8-12]. Texture is represented by properly defined primitives (microtexture) and the spatial arrangement of these primitives (macrotexture). Texture measures extract the visual patterns of images and their spatial definition. Many organisations and individuals have developed retrieval systems such as SIMPLIcity [51], QBIC [52], Netra [53], PicToSeek [54] and PhotoBook [55]. This paper proposes DCTP, a local chromatic-texture descriptor formulated on the basis of LBP. LBP is widely used as a local image descriptor because of its tolerance to monotonic illumination changes and its computational simplicity. The paper is organised as follows. Section 2 describes the related works, Section 3 presents the proposed work, Section 4 elaborates on the experimental analysis, and Section 5 concludes the paper.

Related Works

Texture is a salient feature for CBIR, although it is not easily defined. Texture feature analysis methods fall into two groups: (i) the statistical or stochastic approach and (ii) the structural approach. In the statistical approach [26,30], textures are characterized by statistical measures of pixel intensities and positions. Human texture discrimination in terms of statistical texture properties is investigated in [56], and statistical texture measures include difference histograms and co-occurrence statistics [57-59]. In the structural approach [48,60-62], textures are described through texture primitives, often called texels or textons. Texture features proposed for texture image retrieval include the mean and variance of wavelet coefficients [63], the Gabor wavelet correlogram [64], rotated wavelet filters [65], dual tree complex wavelet filters (DT-CWFs) [66], dual tree rotated complex wavelet filters (DT-RCWFs), rotation-invariant complex wavelet filters [33], the discriminative scale invariant feature transform (D-SIFT) [48] and the multi-scale ridgelet transform [43]. Mathematical morphology [67,68] is a powerful tool for structural texture analysis. Researchers have also used Gabor wavelets [69], wavelet packets [70-80] and Gaussian mixtures [45] for texture feature extraction.

Local image descriptors, a structural approach, perform well for texture-based image retrieval. LBP [48,81-83] was the first local image descriptor introduced in this line of work. LBP is invariant to monotonic changes of gray-scale pixel values, and local contrast can supplement it as an independent, complementary measure. Many other variants of LBP [42-49] have also been proposed. LBP has been extended to a three-valued code called the Local Ternary Pattern (LTP) [71] and, viewing LBP as a non-directional first-order local pattern operator, to a higher-order ((n-1)th-order) operator called the Local Derivative Pattern (LDP) [49]. Another extension of LBP, the Local Tetra Pattern (LTrP) [50], describes the spatial structure of the local region in an image using the direction of the center gray pixel. An enhancement of LTrP, the Local Oppugnant Color Texture Pattern (LOCTP) [80], distinguishes the information extracted from the inter-chromatic texture patterns of different spectral channels within a region.
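The basic LBP operator that DCTP builds on can be sketched as follows. This is a minimal illustration of the standard 8-neighbour, radius-1 LBP, not of DCTP itself; the helper name `lbp_codes` and the neighbour ordering are illustrative choices, not taken from the paper. The usage lines check the invariance to monotonic gray-scale changes mentioned above.

```python
import numpy as np


def lbp_codes(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour, radius-1 LBP code for each interior pixel.

    Bit l of the code is 1 when the l-th neighbour is >= the center pixel,
    so code = sum over l of s(g_l - g_c) * 2**l, with l = 0..7.
    """
    g = gray.astype(np.int64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # (row, col) offsets of the 8 neighbours; this ordering is an arbitrary choice.
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1),
               (0, -1), (1, -1), (1, 0), (1, 1)]
    codes = np.zeros_like(center)
    for l, (dr, dc) in enumerate(offsets):
        neighbour = g[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbour >= center).astype(np.int64) << l
    return codes


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 100, size=(6, 6))
    # A strictly increasing gray-level mapping preserves every comparison,
    # so the LBP codes do not change.
    print(np.array_equal(lbp_codes(img), lbp_codes(2 * img + 10)))  # True
```

Because each bit depends only on the sign of a neighbour-minus-center comparison, any strictly increasing change of gray levels leaves the code map unchanged; this is the property that makes LBP tolerant to monotonic illumination changes.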

The Proposed Work

Many texture-based descriptors have proven effective for feature extraction, and several studies [65,66,72,73] show that combining texture with other features can give better results. Research on perceiving images with human color vision [74] suggests working on separated color spaces [75,76], through which human perception can be incorporated into image retrieval. These research directions motivated us to work on local descriptors and the inter-channel information of color spaces. In DCTP, three pattern sequences are formed based on the pixel position, using a buffer value and the pixel information of two color planes. The intensity information is gathered between the mid-pixel and its neighbouring pixels.

The contributions of this work are as follows.

1. Local information is extracted as spatio-chromatic texture features by extracting features in the inter-channel planes (RB, GR and BG).

2. Three different sequences of patterns are extracted instead of a single pattern, which yields more reliable features. Three different feature vectors are extracted from the RGB, GBR and BRG planes, so the descriptor encapsulates all the possible interactive features of an image.

3. The introduction of a buffer value, β, helps maximize the effect of the feature vector.

4. The analysis is carried out on two benchmark datasets, the CASIA Face Image Database Version 5.0 [77] and the Indian Face Database [78].

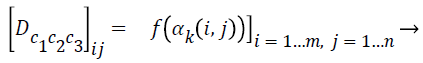

The DCTP code gives the inter-relation between pairs of color spaces. The RGB image is split into its three components (R, G and B). Values from two of the component images are used for further calculation: in the c1-c2-c3 interactive plane, the c1 and c3 components are used (for example, Red and Blue information for the RGB plane, Green and Red information for the GBR plane, and Blue and Green information for the BRG plane). Thus, the information of an image in all three interactions is retrieved in three different feature vectors. The feature vector for the c1-c2-c3 interactive plane is calculated using Equation (1), which extracts the information of an image in the R-G-B, G-B-R and B-R-G interactive planes. Each feature vector carries the pixel information of an image by forming three different sequences of patterns based on the pixel position. The DCTP code of an image in the c1-c2-c3 plane at the ith row and the jth column is calculated as

(1)

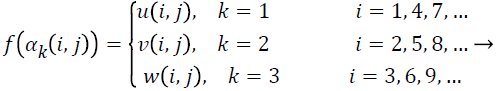
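The channel selection described above can be sketched in NumPy. This only illustrates which two components feed each interactive plane, assuming an H × W × 3 array stored in R, G, B order; the names `PLANES` and `plane_pair` are hypothetical, not from the paper.

```python
import numpy as np

# (c1, c2, c3) channel indices for each interactive plane, assuming the
# input array stores channels in R, G, B order.
PLANES = {"RGB": (0, 1, 2), "GBR": (1, 2, 0), "BRG": (2, 0, 1)}


def plane_pair(rgb: np.ndarray, plane: str) -> tuple[np.ndarray, np.ndarray]:
    """Return the (c1, c3) component images used for one interactive plane."""
    c1, _c2, c3 = PLANES[plane]
    return rgb[..., c1], rgb[..., c3]


if __name__ == "__main__":
    # Tiny image whose R, G and B channels are constant 10, 20 and 30.
    rgb = np.stack([np.full((2, 2), v) for v in (10, 20, 30)], axis=-1)
    for name in PLANES:
        c1_img, c3_img = plane_pair(rgb, name)
        print(name, int(c1_img[0, 0]), int(c3_img[0, 0]))
    # RGB 10 30  (Red and Blue)
    # GBR 20 10  (Green and Red)
    # BRG 30 20  (Blue and Green)
```

Encoding each plane as a cyclic permutation of (R, G, B) makes the c1/c3 rule a single lookup, and the three pairs it yields (RB, GR and BG) are exactly the inter-channel pairs listed in the contributions.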

The DCTP code (D c1c2c3) provides the inter-relation between neighbouring pixels taken from different color planes. f(αk(i,j)) gives the pattern of the pixel information at the (i,j)th position of the image, where the row index i varies from 1 to m and the column index j varies from 1 to n. k identifies the series of rows used to form the pattern sequence: if k is 1, the mid-pixel is taken from the first series of rows (i = 1, 4, 7, …); if k is 2, from the second series (i = 2, 5, 8, …); and if k is 3, from the third series (i = 3, 6, 9, …). Equations (2) to (8) are used to calculate f(αk(i,j)).

(2)

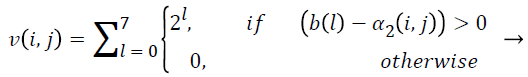

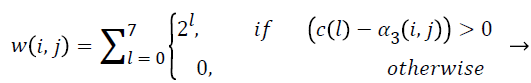

The value of f(αk(i,j)) depends on the row of the pixel, so different sequences of patterns are created for different rows. Since the pixel information of each pattern sequence differs, separate variables u(i,j), v(i,j) and w(i,j) are used for the row-wise calculations: u(i,j) for rows 1, 4, 7, …, v(i,j) for rows 2, 5, 8, … and w(i,j) for rows 3, 6, 9, …, computed using Equations (3)-(5) respectively.

(3)

(4)

(5)

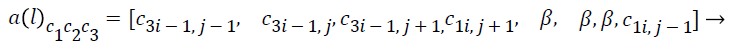

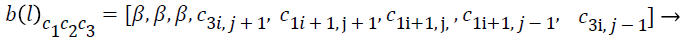

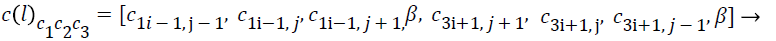
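The row-to-sequence bookkeeping described above reduces to a residue modulo 3. The sketch below uses the paper's 1-indexed rows; `row_series` and `SERIES_VARIABLE` are hypothetical names used only for illustration.

```python
def row_series(i: int) -> int:
    """Pattern-sequence index k (1, 2 or 3) for a 1-indexed image row i."""
    if i < 1:
        raise ValueError("rows are 1-indexed")
    return (i - 1) % 3 + 1


# Row-wise variable named in the text for each sequence.
SERIES_VARIABLE = {1: "u", 2: "v", 3: "w"}

if __name__ == "__main__":
    for i in range(1, 10):
        k = row_series(i)
        print(i, k, SERIES_VARIABLE[k])
```

Rows 1, 4 and 7 map to k = 1 (u), rows 2, 5 and 8 to k = 2 (v), and rows 3, 6 and 9 to k = 3 (w).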

The values a(l), b(l) and c(l) provide the local neighbourhood pixel values for calculating the DCTP code of each center pixel in the RGB plane, using Equations (6)-(8). The corresponding values for the other planes are found by substituting the corresponding color values. αk(i,j) gives the corresponding mid-pixel value in the pattern.

Pattern P1 has the mid-pixel value α1(i,j) and neighbourhood pixel values a(l), pattern P2 has the mid-pixel value α2(i,j) and neighbourhood pixel values b(l), and pattern P3 has the mid-pixel value α3(i,j) and neighbourhood pixel values c(l). The center pixel values αk(i,j) are also replaced according to their position: α1(i,j) is replaced with the c1 component of α(i,j), α2(i,j) with the buffer value β, and α3(i,j) with the c3 component of α(i,j). Thus, each element captures the inter-relation of the Red, Green and Blue information in each pixel. In a pattern sequence, the center pixel is taken from one plane and the neighbouring pixels are taken from the same or a different plane. To maximize the effect of feature extraction, a buffer value β close to zero is inserted at the specified position of the pattern sequence; in this work, β = 0.

(6)

(7)

(8)

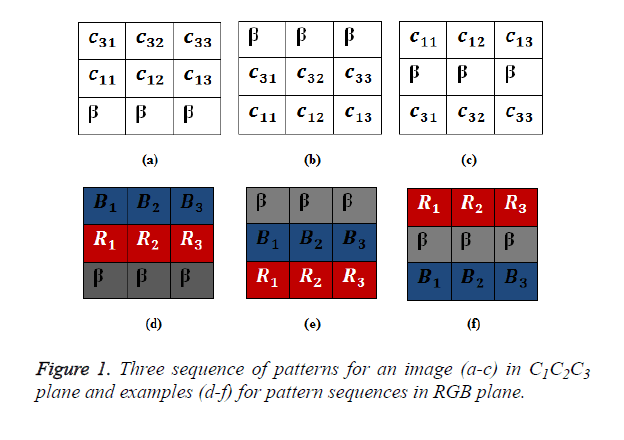

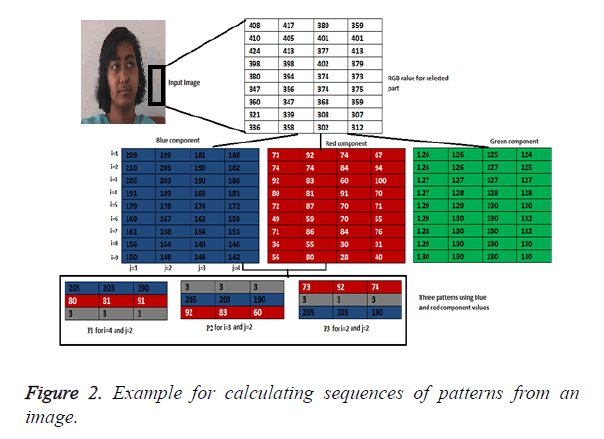
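The center-pixel substitution rule stated above (α1 from c1, α2 set to the buffer value β, α3 from c3) can be sketched for a whole plane as follows. It covers only the center values. The neighbourhood values a(l), b(l) and c(l) of Equations (6)-(8) are not reproduced here because those equations are available only as images, and `center_values` is a hypothetical helper. NumPy rows are 0-indexed, so the paper's rows 1, 4, 7, … correspond to NumPy rows 0, 3, 6, ….

```python
import numpy as np


def center_values(c1: np.ndarray, c3: np.ndarray, beta: float = 0.0) -> np.ndarray:
    """Center value alpha_k(i, j) for every pixel of one interactive plane.

    Paper rows 1, 4, 7, ... (k = 1) take the c1 component, rows 2, 5, 8, ...
    (k = 2) take the buffer value beta, and rows 3, 6, 9, ... (k = 3) take
    the c3 component. beta = 0 follows the value used in the paper.
    """
    if c1.shape != c3.shape:
        raise ValueError("component images must have the same shape")
    alpha = np.empty(c1.shape, dtype=float)
    alpha[0::3] = c1[0::3]   # k = 1
    alpha[1::3] = beta       # k = 2
    alpha[2::3] = c3[2::3]   # k = 3
    return alpha


if __name__ == "__main__":
    red = np.full((6, 2), 10)   # c1 of the RGB plane
    blue = np.full((6, 2), 30)  # c3 of the RGB plane
    print(center_values(red, blue)[:, 0].tolist())  # [10.0, 0.0, 30.0, 10.0, 0.0, 30.0]
```

Strided slicing (`0::3`, `1::3`, `2::3`) selects each row series directly, so the rule is applied without an explicit loop over rows.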

Figure 1 shows the arrangement of the pattern sequences for a given row, with examples obtained using Equations (6)-(8): Figure 1a shows the pattern arrangement for rows 1, 4, 7, …, Figure 1b for rows 2, 5, 8, … and Figure 1c for rows 3, 6, 9, …, while Figures 1d-1f show the corresponding examples in the RGB plane. Figure 2 shows an example of arranging the pattern sequences in an image; its lower part shows the sequences of patterns from which the code that replaces the original pixel value at that position is calculated. The values obtained from Equations (6)-(8) are substituted into Equations (3)-(5) to compute u(i,j), v(i,j) and w(i,j), where l varies from 0 to 7. Since a subtraction is performed between the center pixels and the neighbourhood pixels, distinctiveness is maximized when a low value of β is used. The DCTP code (D c1c2c3) is generated by finding f(αk(i,j)) for the corresponding planes, replacing αk(i,j) with u(i,j), v(i,j) and w(i,j) in the specified column position. DCTP code calculation therefore provides three different codes: one from the RGB plane, one from the GBR plane and one from the BRG plane. These capture the inter-relation of the chromatic pairs RB, GR and BG. This enables effective feature extraction, since the codes encode the relation of one color space with respect to another, as well as the relationship between neighbourhood pixels in one plane and the center pixel in another.
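Table 6 lists DCTP's feature vector length as 3 × 59. Fifty-nine is the number of bins in the standard uniform-pattern histogram of 8-bit LBP codes (58 uniform patterns plus one shared bin for all non-uniform patterns). One plausible reading, assumed here rather than stated in the text, is that each plane's code map is summarized by such a histogram and the three histograms are concatenated. The helpers below (`uniform_lookup`, `plane_histogram`, `feature_vector`) are hypothetical.

```python
import numpy as np


def uniform_lookup(bits: int = 8) -> tuple[np.ndarray, int]:
    """Map each `bits`-bit code to a histogram bin.

    Uniform codes (at most two 0/1 transitions around the circle) get their
    own bin; all non-uniform codes share the last bin.
    """
    n_codes = 2 ** bits
    table = np.empty(n_codes, dtype=np.int64)
    is_uniform = np.zeros(n_codes, dtype=bool)
    next_bin = 0
    for code in range(n_codes):
        # Circular rotation by one bit; XOR marks positions where adjacent bits differ.
        rotated = (code >> 1) | ((code & 1) << (bits - 1))
        if bin(code ^ rotated).count("1") <= 2:
            table[code] = next_bin
            is_uniform[code] = True
            next_bin += 1
    table[~is_uniform] = next_bin
    return table, next_bin + 1


def plane_histogram(codes: np.ndarray, table: np.ndarray, n_bins: int) -> np.ndarray:
    """Normalized uniform-pattern histogram of one plane's code map."""
    counts = np.bincount(table[codes.ravel()], minlength=n_bins)
    return counts / counts.sum()


def feature_vector(code_maps: list[np.ndarray]) -> np.ndarray:
    """Concatenate the histograms of the RGB, GBR and BRG code maps."""
    table, n_bins = uniform_lookup()
    return np.concatenate([plane_histogram(c, table, n_bins) for c in code_maps])


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    maps = [rng.integers(0, 256, size=(32, 32)) for _ in range(3)]
    print(feature_vector(maps).shape)  # (177,)
```

Each normalized histogram sums to 1, so the concatenated vector of length 3 × 59 = 177 sums to 3.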

Experimental Analysis

The experimental analysis compares the proposed scheme with existing approaches on two databases of different nature: the CASIA Face Image Database Version 5.0 (DB1) [77] and the Indian Face Database (DB2) [78]. The major finding of this study is that texture features can be represented well by the distribution of a pixel in relation to its neighbouring pixels in the other planes. Since the proposed work is a local descriptor that extracts texture information in inter-channel planes, it is compared with state-of-the-art texture-based local descriptors such as LBP and LTrP. It is also compared with a purely color-based feature extraction method (HSV color histogram), a purely texture-based descriptor (HOG) and an inter-channel texture descriptor (LOCTP).

Experiment 1

Experiment 1 uses database DB1, which consists of 500 images from the CASIA Face Image Database Version 5.0 [77]. The intra-class variations include illumination, pose, expression, eyeglasses and imaging distance, among others. Each image is 640 × 480 pixels. Figure 3 shows some example images from the DB1 database.

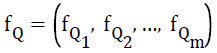

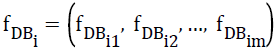

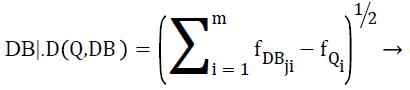

For this experiment, each image in the database is used as the query image. For each query image Iq, the system retrieves the n database images X = (x1, x2, …, xn) with the shortest matching distance computed using Equation (9). If a retrieved image xi belongs to the same category as the query image, the system has correctly identified the expected image; otherwise, it has failed. After feature extraction, the query image Q is represented by the feature vector fQ = (fQ1, fQ2, …, fQL), where L is the feature vector length. Similarly, each image in the database is represented by the feature vector fDBj = (fDBj1, fDBj2, …, fDBjL), j = 1, 2, …, |DB|. The goal is to select the n images that best resemble the query image, which involves measuring the distance between the query image and each image in the database |DB|:

(9)

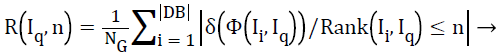

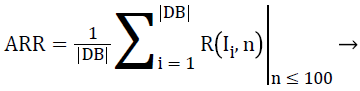

where fDBji is the ith feature of the jth image in the database |DB|. An image with a smaller distance is considered more relevant to the query. The proposed scheme is evaluated in terms of average precision, average recall and average retrieval rate (ARR). For the query image Iq, the precision is defined as follows.

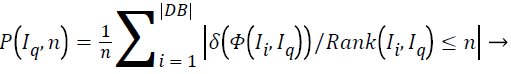

(10)

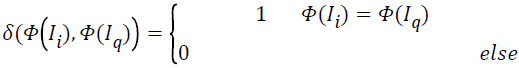

where n indicates the number of retrieved images, Φ(x) is the category of x, and Rank(Ii, Iq) returns the rank of image Ii (for the query image Iq) among all images of |DB|. The indicator term in Equation (10) is defined as follows.

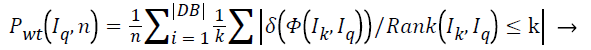

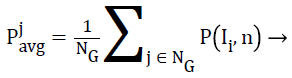

In the same manner, the weighted precision and recall are defined as

(11)

(12)

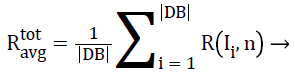

The average precision for the jth category and total average recall of the reference image database are given by Equations (13) and (14) respectively.

(13)

(14)

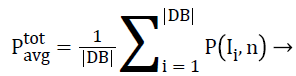

Finally, the total average precision and ARR for the whole reference image database are computed by Equations (15) and (16) respectively.

(15)

(16)
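The retrieval protocol and the precision and recall measures above can be sketched as follows. Equation (9) is available only as an image, so the distance used here is an assumption: the d1 distance Σ|fDBji - fQi| / (1 + fDBji + fQi), a common choice in LBP- and LTrP-based retrieval work. Counting the query image itself among the retrieved results is also an assumption. Recall is taken as the number of relevant images retrieved divided by the number of database images in the query's category. All function names are hypothetical.

```python
import numpy as np


def d1_distance(f_q: np.ndarray, f_db: np.ndarray) -> np.ndarray:
    """Assumed d1 distance between a query vector and each row of f_db."""
    return np.sum(np.abs(f_db - f_q) / (1.0 + f_db + f_q), axis=1)


def precision_recall(q: int, features: np.ndarray, labels: np.ndarray, n: int):
    """Precision and recall of the top-n images retrieved for query index q."""
    dist = d1_distance(features[q], features)
    top_n = np.argsort(dist, kind="stable")[:n]
    hits = int(np.sum(labels[top_n] == labels[q]))
    n_relevant = int(np.sum(labels == labels[q]))
    return hits / n, hits / n_relevant


def average_precision_recall(features: np.ndarray, labels: np.ndarray, n: int):
    """Average precision and recall when every image is used in turn as the query."""
    scores = np.array([precision_recall(q, features, labels, n)
                       for q in range(len(labels))])
    return scores.mean(axis=0)


if __name__ == "__main__":
    feats = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
    labs = np.array([0, 0, 1, 1])
    print(precision_recall(0, feats, labs, n=3))  # precision 2/3, recall 1.0
    print(average_precision_recall(feats, labs, n=2))  # [1. 1.]
```

The d1 denominator keeps large histogram bins from dominating the distance. With non-negative features such as histograms it is always at least 1, so the division is safe.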

Table 1 gives the mean ± SD of the first six features (columns of the feature vector) for images of six face classes. The feature values differ markedly between classes, which makes query retrieval easier. Table 2 gives the precision for different numbers of top retrieved images, and Table 3 shows the average precision and average recall of the DB1 database images.

| Class | Feature 1 | Feature 2 | Feature 3 | Feature 4 | Feature 5 | Feature 6 |
|---|---|---|---|---|---|---|
| Class 1 | 643.3 ± 63.7 | 3652.4 ± 297.9 | 3.7 ± 1.4 | 6.9 ± 3.4 | 2.9 ± 1.4 | 47.0 ± 8.0 |
| Class 2 | 1686.0 ± 107.8 | 4007.8 ± 80.2 | 14.9 ± 4.4 | 10.8 ± 4.1 | 8.3 ± 4.1 | 34.1 ± 10.5 |
| Class 3 | 797.7 ± 70.2 | 2652.7 ± 234.4 | 6.7 ± 3.2 | 7.0 ± 3.9 | 4.2 ± 2.1 | 29.7 ± 6.2 |
| Class 4 | 540.8 ± 54.2 | 1510.1 ± 123.4 | 4.3 ± 1.1 | 2.6 ± 0.7 | 2.1 ± 1.2 | 13.6 ± 2.4 |
| Class 5 | 773.5 ± 65.6 | 2128.6 ± 198.3 | 7.3 ± 3.3 | 5.9 ± 2.9 | 4.4 ± 1.8 | 23.1 ± 5.4 |
| Class 6 | 467.8 ± 45.3 | 1160.9 ± 132.4 | 3.5 ± 1.2 | 2.7 ± 1.1 | 1.8 ± 1.1 | 8.1 ± 1.2 |

Table 1. Mean ± SD of features.

| Methods | Top 5 | Top 10 | Top 15 | Top 20 | Top 25 |
|---|---|---|---|---|---|
| HOG | 60.6 | 50.4 | 43.74 | 38.95 | 35.18 |
| HSV | 26.52 | 24.85 | 23.79 | 22.79 | 21.58 |
| LBP | 91.75 | 87.37 | 83.72 | 79.88 | 75.18 |
| LTrP | 91.64 | 87.55 | 83.86 | 80.03 | 75.52 |
| LOCTP | 99.21 | 97.72 | 95.78 | 93.39 | 89.38 |
| DCTP | 99.67 | 98.83 | 97.6 | 96.08 | 93.47 |

Table 2. Precision (in %) for different numbers of top retrieved images in the DB1 database.

| Methods | Average Precision | Average Recall |
|---|---|---|
| HOG | 60.6 | 35.18 |
| HSV | 26.52 | 21.58 |
| LBP | 91.75 | 75.18 |
| LTrP | 91.64 | 76 |
| LOCTP | 99.21 | 89.38 |
| DCTP | 99.67 | 93.47 |

Table 3. Average Precision and Average Recall of DB1.

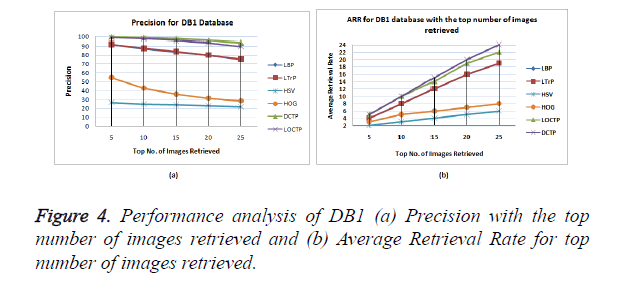

The performance of LBP, LTrP, LOCTP, HOG, HSV and DCTP is compared in Figures 4 and 5, which show that DCTP gives a considerable improvement over the other methods. For DB1 (Table 3), the average precision of DCTP is higher by 7.92 percentage points than LBP, 8.03 than LTrP, 39.07 than HOG and 73.15 than the HSV histogram, and its average recall is higher by 18.29 than LBP, 17.47 than LTrP, 58.29 than HOG and 71.89 than the HSV histogram. Thus, the proposed method gives a substantial improvement on the DB1 database. The ARR of the proposed method also shows a clear improvement.

Experiment 2

Experiment 2 uses images from the Indian Face Database [78]; 1000 images were collected to form database DB2. Figure 6 shows some example images from DB2. The images were resized to 128 × 128 to reduce computation, and one normal face of each subject is used for training. This experiment uses 240 expression-variant and 357 pose-variant faces. The Indian Face Database contains pose samples with rotation angles of up to 180°. Each image is analysed in turn as the query, with all the other images serving as training images.

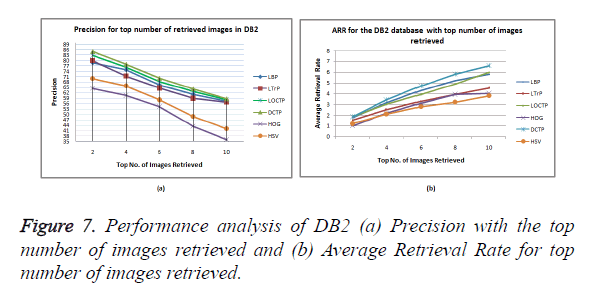

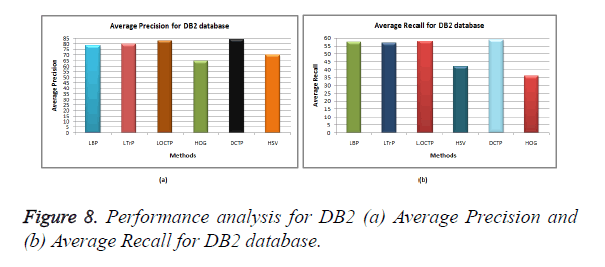

Table 4 gives the average precision for different numbers of top retrieved images in the DB2 database, and Table 5 shows the average precision and average recall of DB2. Figures 7 and 8 show the average precision and average recall of DCTP and the other existing methods versus the number of top matches.

| Methods | Top 2 | Top 4 | Top 6 | Top 8 | Top 10 |
|---|---|---|---|---|---|
| HOG | 64.46 | 60.48 | 54.2 | 43.4 | 35.9 |
| HSV | 69.93 | 65.85 | 53.79 | 48.79 | 42.01 |
| LBP | 78.64 | 78.9 | 70.87 | 64.7 | 57.35 |
| LTrP | 79.84 | 71.35 | 68.53 | 58.8 | 56.8 |
| LOCTP | 82.64 | 76.05 | 68.1 | 62.62 | 58 |
| DCTP | 84.9 | 77.89 | 67.55 | 62.21 | 58.7 |

Table 4. Average precision (in %) for different numbers of top retrieved images in the DB2 database.

| Methods | Average Precision | Average Recall |
|---|---|---|
| HSV | 69.93 | 42.01 |
| HOG | 64.46 | 35.9 |
| LBP | 78.64 | 57.35 |
| LTrP | 79.84 | 56.8 |
| LOCTP | 82.64 | 58 |
| DCTP | 84.06 | 58.7 |

Table 5. Average precision and average recall of database DB2.

For DB2, the average precision of DCTP is higher by 5.42 percentage points than LBP, 4.22 than LTrP, 1.42 than LOCTP, 19.6 than HOG and 14.13 than HSV, and its average recall is higher by 1.35 than LBP, 1.9 than LTrP, 22.8 than HOG and 16.69 than the HSV histogram. Thus, the proposed method also improves results on the DB2 database.
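The improvements quoted for DB1 and DB2 are differences, in percentage points, between DCTP's entry and each baseline's entry in Tables 3 and 5. The small sketch below recomputes them from those tables. The values are copied from the tables, while the dictionary layout and the name `improvements` are illustrative.

```python
# (average precision, average recall) in %, copied from Table 3 (DB1) and Table 5 (DB2).
TABLES = {
    "DB1": {"HOG": (60.6, 35.18), "HSV": (26.52, 21.58), "LBP": (91.75, 75.18),
            "LTrP": (91.64, 76.0), "LOCTP": (99.21, 89.38), "DCTP": (99.67, 93.47)},
    "DB2": {"HSV": (69.93, 42.01), "HOG": (64.46, 35.9), "LBP": (78.64, 57.35),
            "LTrP": (79.84, 56.8), "LOCTP": (82.64, 58.0), "DCTP": (84.06, 58.7)},
}


def improvements(table: dict, reference: str = "DCTP") -> dict:
    """Percentage-point gain of `reference` over every other method."""
    ref_p, ref_r = table[reference]
    return {m: (round(ref_p - p, 2), round(ref_r - r, 2))
            for m, (p, r) in table.items() if m != reference}


if __name__ == "__main__":
    for db, table in TABLES.items():
        print(db, improvements(table))
```

Rounding to two decimals matches the precision of the table entries. The differences are absolute (percentage points), not relative percentages.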

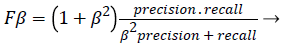

The feature vector lengths and the computation times for feature extraction and query retrieval on DB1 and DB2 are given in Table 6. The feature vector of DCTP (3 × 59) is shorter than those of all the other methods except HSV and LBP. The feature extraction time of DCTP is close to that of LBP on DB1 and below those of LTrP and LOCTP on both databases. Its query retrieval times are comparable to those of LBP and well below those of LTrP and LOCTP, while its retrieval performance exceeds that of all the other methods. The system's performance can also be evaluated with a single metric combining precision and recall, the F-score (Fβ) [84], defined in Equation (17).

| Methods | Feature Vector Length | DB1 Feature Extraction (s) | DB1 Query Retrieval (s) | DB2 Feature Extraction (s) | DB2 Query Retrieval (s) |
|---|---|---|---|---|---|
| HOG | 3249 | 224.88 | 102.1 | 93.84 | 12.33 |
| HSV | 32 | 215.71 | 20.57 | 423.2 | 12.2 |
| LBP | 59 | 25.61 | 176.43 | 132.3 | 14.4 |
| LTrP | 13 × 59 | 62.39 | 472.73 | 398.4 | 21.2 |
| LOCTP | 3 × 13 × 59 | 139.8 | 1239.15 | 1020.84 | 49.52 |
| DCTP | 3 × 59 | 27.88 | 211.3 | 201.20 | 16.36 |

Table 6. Feature vector length and computation times for feature extraction and query retrieval of DB1 and DB2 databases.

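$$F_{\beta} = \frac{(1+\beta^{2})\cdot \mathrm{Precision}\cdot \mathrm{Recall}}{\beta^{2}\cdot \mathrm{Precision} + \mathrm{Recall}} \qquad (17)$$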

Here β sets the relative weight given to recall and precision. The F-score lies between 0 and 1, with 1 representing a perfect retrieval system that is completely robust and completely discriminant (100% precision and 100% recall). This work uses β = 0.5, which gives precision twice as much importance as recall. As described by Lin [85], the concordance correlation coefficient (CCC) is also an appropriate index of agreement. It combines a measure of precision (how tightly paired values scatter about their best-fit line) with a measure of accuracy (how far that line departs from the line of perfect agreement). Table 7 shows that DCTP also achieves the highest F-score and CCC on both databases.

| Database | Measure | LBP | LTrP | HSV | HOG | LOCTP | DCTP |
|---|---|---|---|---|---|---|---|
| DB1 | F-score | 0.82 | 0.82 | 0.24 | 0.46 | 0.94 | 0.98 |
| DB1 | CCC | 0.84 | 0.84 | 0.25 | 0.48 | 0.95 | 0.97 |
| DB2 | F-score | 0.64 | 0.64 | 0.41 | 0.44 | 0.60 | 0.77 |
| DB2 | CCC | 0.65 | 0.65 | 0.4 | 0.43 | 0.68 | 0.78 |

Table 7. F-score and CCC for the database DB1 and DB2.
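Equation (17) and Lin's concordance correlation coefficient can be computed as below. This is a generic sketch. The F-score uses the standard Fβ definition with β = 0.5 as the default, and `lin_ccc` implements Lin's coefficient ρc = 2·sxy / (sx² + sy² + (x̄ - ȳ)²) with population moments. The text does not state which pairs of values were fed to the CCC in Table 7, so the CCC inputs here are purely illustrative. Both function names are hypothetical.

```python
import numpy as np


def f_beta(precision: float, recall: float, beta: float = 0.5) -> float:
    """F-beta score; beta < 1 weights precision more heavily than recall."""
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def lin_ccc(x, y) -> float:
    """Lin's concordance correlation coefficient (population moments)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    mx, my = x.mean(), y.mean()
    sxy = np.mean((x - mx) * (y - my))
    return float(2 * sxy / (x.var() + y.var() + (mx - my) ** 2))


if __name__ == "__main__":
    # DCTP on DB1 (Table 3): precision 99.67%, recall 93.47%.
    print(round(f_beta(0.9967, 0.9347), 2))  # 0.98
    print(lin_ccc([1, 2, 3], [1, 2, 3]))     # perfect agreement
    print(lin_ccc([1, 2, 3], [2, 3, 4]))     # constant shift of 1: 4/7
```

Unlike Pearson's correlation, the CCC is penalized by the (x̄ - ȳ)² term. A constant shift between two otherwise identical series therefore lowers it below 1, which is why it measures agreement rather than just association. With DCTP's DB1 values from Table 3, the F0.5 score rounds to 0.98, in line with Table 7.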

Conclusion

In this paper, we propose a novel method for effective feature extraction. The key idea is to extract texture features from separate color spaces, which captures the inter-relation between the color spaces. The advantages of our approach come from four aspects: i) the block-based calculation yields the local orientation of pixels by extracting the relation between the mid and neighbouring pixels; ii) since there are three different interactions (in the RGB, GBR and BRG planes) for a single block, features are extracted more effectively; iii) the introduction of three sequences of patterns for different rows adds to the uniqueness of the feature vector; and iv) the introduction of the buffer value maximizes the effect of the extracted features. The experimental analysis on both DB1 and DB2 shows clear improvements over previous works, and in terms of F-score and CCC, DCTP outperforms LBP, LTrP, HSV, HOG and LOCTP on both databases. Since DCTP extracts local features from inter-channel information with relatively low computational complexity, it may suit various applications such as multi-modal biometrics, tumour identification, character recognition and big data analysis.

Acknowledgement

We would like to thank the anonymous reviewers for their valuable comments and suggestions. Our thanks are due to Dr. S. Arulkrishnamoorthy for his linguistic consultancy.

References

- Smith JR, Chang SF. Automated image retrieval using color and texture, Columbia University, Technical Report CU/CTR 408_95_14, 1995.

- Su CH, Chiu HS, Hsieh TM. An efficient image retrieval based on HSV color space. Elect Control Eng 2011.

- Ma JQ. Content-Based Image Retrieval with HSV Color Space and TextureFeatures. International Conference on Web Information Systems and Mining 2009.

- Han J, Ma KK. Fuzzy color histogram and its use in color image retrieval. IEEE Trans Image Process 2002; 11: 944-952.

- Vadivel A, Shamik S, Majumdar AK. An integrated color and intensity co-occurrence matrix. Pattern Recogn Lett 2007; 28: 974-983.

- Huang J, Kumar S.R, Mitra M. Combining supervised learning with colorcorrelograms for content-based image retrieval. In Proceedings 5th ACM Multimediaconference 1997.

- Huang J, Kumar SR, Mitra M. Image Indexing Using Colour Correlograms. Proc IEEE Conf on Computer Vision and Pattern Recognition 1997.

- van de Sande KE, Gevers T, Snoek CG. Evaluating color descriptors for object and scene recognition. IEEE Trans Pattern Anal Mach Intell 2010; 32: 1582-1596.

- Swain M, Ballard DH. Indexing via color histograms. Proc 3rd intconfcomput vision, Rochester Univ, 1991.

- Stricker M, Orengo M. Similarity of color images, Proceedings of SPIE-Storage Retrieval Image Video Database 1995.

- Pass G, Zabih R, Miller J. Comparing images using color coherence vectors. In Proceedings of 4th ACM multimedia conf, Boston, Massachusetts, 1997.

- Zhang L, Wang L, Lin W. Semisupervised biased maximum margin analysis for interactive image retrieval. IEEE Trans Image Process 2012; 21: 2294-2308.

- Scott GJ, Klaric MM, Davis CH. Entropy-Balanced Bitmap Tree for Shape-Based Object Retrieval From Large-Scale Satellite Imagery Databases. IEEE Trans Geosci Remote Sensing 2011; 49: 1603-1616.

- Belongie S, Malik J, Puzicha J. Shape Matching and Object Recognition using Shape Contexts. IEEE Trans Pattern Anal Machine Intell 2002; 24: 509-522.

- Pan YF, Hou X, Liu CL. A hybrid approach to detect and localize texts in natural scene images. IEEE Trans Image Process 2011; 20: 800-813.

- Vizireanu DN, Halunga S, Marghescu G. Morphological skeleton decompositioninterframe interpolation method. J Electr Imaging 2010; 19: 1-3.

- Vizireanu DN. Morphological shape decomposition interframe interpolation method. J Electr Imaging 2008; 19: 1-5.

- Vizireanu DN. Generalizations of binary morphological shape decomposition. J Elect Imaging 2007; 16: 1-6.

- Distasi R, Nappi M, Tucci M. FIRE: fractal indexing with robust extensions for image databases. IEEE Transact Image Process 2003; 12: 373-384.

- Kouzani AZ. Classification of face images using local iterated function systems. Machine Vision Appl 2008; 19: 223-248.

- Pi M, Li H. Fractal indexing with the joint statistical properties and its application in texture image retrieval. IET Image Processing 2008; 2: 218-230.

- Mandelbrot B. Fractals-forms, chance and dimension. W. H. Freeman and Company, UK, 1997.

- Shechtman E, Irani M. Space-time behavior-based correlation-Or-how to tell if two underlying motion fields are similar without computing them? IEEE Trans Pattern Anal Mach Intell 2007; 29: 2045-2056.

- Chatfield K, Philbin J, Zisserman A. Efficient retrieval of deformable shape classes using local self-similarities. In NORDIA Workshop at ICCV, 2009.

- Manjunath BS, Ma WY. Texture Features for browsing and Retrieval of Image Data. IEEE Transact Pattern Anal Machine Intell 1996; 18: 837-842.

- Lerski R, Straughan K, Shad L, Boyce D, Bluml S, Zuna I. MR Image Texture Analysis-An Approach to Tissue Characterisation. Magnetic Resonance Imaging 1993; 11: 873-887.

- Manjunath BS, Ohm JR, Vasudevan V, Yamada A. Color and Texture descriptors. IEEE Transact Circuits Syst Video Technol 2001; 11: 703-715.

- Abbadeni N. Computational Perceptual Features for Texture Representation and Retrieval. IEEE Transact Image Process 2011; 20: 236-246.

- Howe NR, Huttenlocher DP. Integrating Colour, Texture and Geometry for Image Retrieval. Proc IEEE Conf Computer Vision Pattern Recognition 2000; 2: 239-246.

- Strzelecki M. Segmentation of Textured Biomedical Images Using Neural Networks. IEEE Workshop on Signal Processing, Poznan, 1995.

- Heikklä M, Pietikäinen M. A texture-based method for modeling the background and detecting moving objects. IEEE Trans Pattern Anal Mach Intell 2006; 28: 657-662.

- Heikkila M, Pietikainen M, Schmid C. Description of interest regions with localbinary patterns. Pattern Recognit 2009; 42: 425-436.

- Ojala T, Pietikainen M, Harwood D. A comparative study of texture measures with classification based on feature distributions. Pattern Recognit 1996; 29: 51-59.

- Xiaoyang A, Triggs B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 2010; 19: 1635-1650.

- Smith JR, Chang SF. Automated binary texture feature sets for image retrieval. In Proc IEEE Intconf Acoustics, Speech and Signal Processing, Columbia Univ, New York, 1996.

- Moghaddam HA, Saadatmand TM. Gabor wavelet correlogram algorithm for image indexing and retrieval. 18th int conf pattern recognition, KN Toosi Univ of Technol, Iran, 2006.

- Kokare M, Biswas PK, Chatterji BN. Texture image retrieval using rotated Wavelet Filters. Pattern Recognit Lett 2007; 28: 1240-1249.

- Kokare M, Biswas PK, Chatterji BN. Texture image retrieval using new rotated complex wavelet filters. IEEE Trans Syst Man Cybern B Cybern 2005; 35: 1168-1178.

- Kokare M, Biswas PK, Chatterji BN. Rotation-invariant texture image retrieval using rotated complex wavelet filters. IEEE Trans Syst Man Cyber 2006; 336: 1273-1282.

- Soyel H, Demirel H. Localized discriminative scale invariant feature transform based facial expression recognition. Comput Electr Eng 2012; 38: 1299-1309.

- Gonde AB, Maheshwari RP, Balasubramanian R. Multi-scale ridgelet transform for content-based image retrieval. In IEEE int advance computing conf, Patial, India, 2010.

- Guo Z, Zhang L, Zhang D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans Image Process 2010; 19: 1657-1663.

- Guo Z, Zhang L, Zhang D. Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognit 2010; 43: 706-719.

- Li M, Staunton RC. Optimum Gabor filter design and local binary patterns for texture segmentation. Pattern Recognit Lett 2008; 29: 664-672.

- Lategahn H, Gross S, Stehle T, Aach T. Texture classification by modeling joint distributions of local patterns with gaussian mixtures. IEEE Trans Image Process 2010; 19: 1548-1557.

- Liao S, Law MW, Chung AC. Dominant local binary patterns for texture classification. IEEE Trans Image Process 2009; 18: 1107-1118.

- Joan S, Rosenfild A. An application of texture analysis to materials inspection. Pattern Recognit 1976; 8: 195-200.

- Ojala T, Pietikainen M, Maenpaa T. Multiresolutiongray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans Pattern Anal Mach Intell 2002; 24: 971-987.

- Zhang B, Gao Y, Zhao S. Local Derivative Pattern Versus Local Binary Pattern: Face Recognition With High-Order Local Pattern Descriptor. IEEE Trans Image Process 2010; 19: 533-544.

- Murala S, Maheshwari RP, Balasubramanian R. Local tetra patterns: a new feature descriptor for content-based image retrieval. IEEE Trans Image Process 2012; 21: 2874-2886.

- Wang JZ, Li J, Wiederhold G. SIMPLIcity: semantics-sensitive integrated matching for picture libraries. IEEE Trans Pattern Anal Mach Intell 2001; 23.

- http://www.isy.liu.se/cvl/Projects/VISIT-bjojo/survey/surveyonCBIR/node26.html

- Manjunath BS. NeTra: A toolbox for navigating large image databases. Multimedia Systems 1999; 7: 184-198.

- Gevers T, Smeulders A. PicToSeek: a content-based image search system for the World Wide Web. Proc Visual 97, Knowledge Systems Institute, Chicago, 1997.

- http://hd.media.mit.edu/tech-reports/TR-255.pdf

- Julesz B. Experiments in the visual perception of texture. Sci Am 1975; 232: 34-43.

- Haralick RM, Shanmugam K, Dinstein I. Textural features for image classification. IEEE Trans Syst Man Cybern 1973; 3: 610-621.

- Weszka JS, Rosenfeld A. An application of texture analysis to materials inspection. Pattern Recognit 1976; 8: 195-200.

- Unser M. Local linear transforms for texture measurements. Signal Process 1986; 11: 61-79.

- Julesz B. Texton theory of two-dimensional and three-dimensional vision. Proceedings SPIE Processing and Display of Three-Dimensional Data 1983.

- Haralick R. Statistical and structural approaches to texture. Proc IEEE 1979; 67: 786-804.

- Levine M. Vision in man and machine. McGraw-Hill, New York, 1985.

- Kekre HB, Sarode TK, Thepade SD, Suryavanshi V. Improved texture feature based image retrieval using Kekre's fast codebook generation algorithm. International Conference on Contours of Computing Technology (Thinkquest-2010), Babasaheb Gawde Institute of Technology, Mumbai, India, 2010.

- Marr D. Vision: a computational investigation into the human representation and processing of visual information. Freeman and Co., New York, 1982.

- Drimbarean A, Whelan PF. Experiments in colour texture analysis. Pattern Recognit Lett 2001; 22: 1161-1167.

- Paschos G. Perceptually uniform color spaces for color texture analysis: an empirical evaluation. IEEE Trans Image Process 2001; 10: 932-937.

- Serra J. Image analysis and mathematical morphology. Academic Press, London, 1982.

- Chen Y, Dougherty E. Gray-scale morphological granulometric texture classification. Opt Eng 1994; 33: 2713-2722.

- Ahmadian A, Mostafa A, Abolhassani M, Salimpour Y. A texture classification method for diffused liver diseases using Gabor wavelets. Conf Proc IEEE Eng Med Biol Soc 2005; 2: 1567-1570.

- Laine A, Fan J. Texture classification by wavelet packet signatures. IEEE Trans Pattern Anal Mach Intell 1993; 15: 1186-1191.

- Tan X, Triggs B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 2010; 19: 1635-1650.

- Howe NR, Huttenlocher DP. Integrating color, texture and geometry for image retrieval. Proceedings IEEE Conference on Computer Vision and Pattern Recognition 2000.

- Subrahmanyam M, Wu QMJ, Maheshwari RP, Balasubramanian R. Modified color motif co-occurrence matrix for image indexing and retrieval. Comput Electr Eng 2013.

- Kaiser PK, Boynton RM. Human color vision. Optical Society of America, 1996.

- Jain A, Healey G. A multiscale representation including opponent color features for texture recognition. IEEE Trans Image Process 1998; 7: 124-128.

- Poirson AB, Wandell BA. Appearance of colored patterns: pattern-color separability. J Opt Soc Am A 1993; 10: 2458-2470.

- http://biometrics.idealtest.org

- http://vis-www.cs.umass.edu/~vidit/IndianFaceDatabase

- Esmaeili MM, Fatourechi M, Ward RK. A robust and fast video copy detection system using content-based fingerprinting. IEEE Trans Inf Forensics Security 2011; 6: 213-225.

- Jacob JI, Srinivasagan KG, Jayapriya K. Local oppugnant color texture pattern for image retrieval system. Pattern Recognit Lett 2014; 42: 72-78.

- Acharya UR, Sree SV, Krishnan MM, Molinari F, Saba L, Ho SY, Ahuja AT, Ho SC, Nicolaides A, Suri JS. Atherosclerotic risk stratification strategy for carotid arteries using texture-based features. Ultrasound Med Biol 2012; 38: 899-915.

- Mookiah MRK, Acharya UR, Martis RJ, Chua CK, Lim CM, Ng EYK, Laude A. Evolutionary algorithm based classifier parameter tuning for automatic diabetic retinopathy grading: A hybrid feature extraction approach. Knowledge-Based Systems 2013; 39: 9-22.

- Acharya UR, Sree SV, Krishnan MMR, Molinari F, Garberoglio R, Suri JS. Non-invasive automated 3D thyroid lesion classification in ultrasound: a class of ThyroScan™ systems. Ultrasonics 2012; 52: 508-520.

- Esmaeili MM, Fatourechi M, Ward RK. A robust and fast video copy detection system using content-based fingerprinting. IEEE Trans Inf Forensics Security 2011; 6: 213-226.

- Crawford SB, Kosinski AS, Lin HM, Williamson JM, Barnhart HX. Computer programs for the concordance correlation coefficient. Comput Methods Programs Biomed 2007; 88: 62-74.