Research Article - Biomedical Research (2017) Volume 28, Issue 6

A novel method for multi-modal fusion based image embedding and compression technique using CT/PET images

Saranya G* and Nirmala Devi S

Department of Electronics and Communication Engineering, Anna University, Chennai, Tamil Nadu, India

*Corresponding Author:

Saranya G

Department of Electronics and Communication Engineering

Anna University, Tamil Nadu, India

Accepted date: November 18, 2016

Abstract

Image embedding has a wide range of applications in the medical field. It helps secure patient information against intruders while offering high storage capacity. Medical images of different modalities, such as CT and PET, can be sent together with the Patient Medical Image (PMI) to physicians across the world for diagnosis. Because of bandwidth and storage constraints, medical images must be compressed before transmission and storage. This paper presents an evaluation of a fusion-based image embedding and reconstruction process for CT and PET images. Image fusion is compared using the Wavelet Transform (WT) and the Complex Contourlet Transform (CCT), and compression is analysed using Run Length Encoding (RLE) and Huffman Encoding (HE). The proposed method helps secure patient information and provides high hiding capacity for storage in the hospital digital database, with improved values of MSE and PSNR.

Keywords

Complex contourlet transform, Image registration, Image fusion, Image embedding, Image compression, Image reconstruction, Wavelet transform, Run length encoding, Huffman encoding.

Introduction

With the rapid development of medical imaging systems, image fusion plays a vital role in diagnosis and treatment. The aim of image fusion in this work is to locate the tumour in the source images. By combining the CT and PET source images, the fused image preserves the edge details and the necessary information from each individual grayscale image. Registration of medical images is the most important aspect of image fusion. It provides an efficient geometrical transformation between two data sets by matching the PET image data set to the CT image data set. Hence, the output fused image provides the complete information from the source images without artefacts or inconsistencies.

Image compression is widely used in applications that store, carry and retrieve large amounts of data, such as multimedia, documents, video conferencing, and medical imaging. Its main aim is to reduce redundancy so that data can be stored or transmitted efficiently, which in turn reduces file sizes in the hospital digital database. Image compression falls into two categories: lossless and lossy. With lossless compression, the original data can be recovered exactly, while with lossy compression only a close approximation of the original data can be obtained.

In this work, a new approach based on the Complex Contourlet Transform (CCT) is used, which reduces the computational complexity and improves the image quality. The CCT has two major stages [1]. In the first stage, the Dual-Tree Complex Wavelet Transform (DT-CWT) performs the multiresolution decomposition, giving six directional sub-bands at each scale of the detail coefficient sub-space. Each sub-band has real and imaginary wavelet coefficients.

In the second stage, a Directional Filter Bank (DFB) performs the multi-directional decomposition, grouping the locally correlated coefficients captured by the DT-CWT. After these two stages, the CCT provides the low-frequency and high-frequency bands. The resulting transform combines the properties of the Non-Sub Sampled Contourlet Transform (NSCT) (i.e. multi-resolution, localization, directionality, and anisotropy) [2] with those of the DT-CWT (i.e. translation invariance, directionality). Therefore, it is computationally more efficient than the other transform methods.

The performance of the proposed algorithm is compared using common compression methods such as RLE and Huffman coding. Quality measures such as Peak Signal to Noise Ratio (PSNR), Compression Ratio (CR), and Space Saving (SS) are estimated to assess the quality of the compressed image.

Proposed Methodology

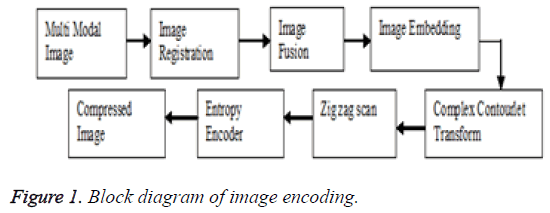

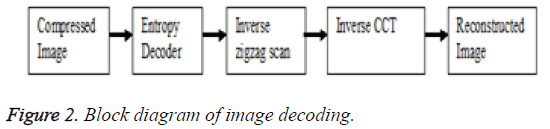

This section describes the proposed method for fusion-based image embedding and reconstruction. Multi-modal images such as CT and PET are first registered, and the registered images are then fused using different transform methods. In the encoder block, the two-dimensional Complex Contourlet Transform (CCT) is first applied to the fused image. The transform coefficients are then entropy coded to form the output code stream. In the decoder block, the code stream is first entropy decoded and the Inverse Complex Contourlet Transform (ICCT) is then applied, which yields the reconstructed image. The block diagrams of image encoding and decoding for the proposed method are shown in Figures 1 and 2.

Image registration

Medical images are acquired with different imaging devices, such as Computed Tomography (CT) and Positron Emission Tomography (PET), at different times or from different perspectives. Variation in patient orientation, differences in resolution and differences between the modalities can make it difficult for a clinician to mentally fuse all the image information accurately. For this reason, there has been considerable interest in using image registration to transfer all the acquired image details into a common coordinate frame.

Registration of medical images is the most important aspect of image fusion. It provides an efficient geometrical transformation between two data sets by matching the PET image data set to the CT image data set. Hence, the output fused image provides the complete information from the source images without artifacts or inconsistencies.

Image fusion

Image fusion is an important tool for medical diagnosis and treatment. It combines details from multiple modalities to provide both physiological and anatomical information at high spatial resolution for clinical diagnosis and therapy. It reduces uncertainty and redundancy in the output while maximizing the relevant details taken from two or more images of a scene, producing one composite image that is more informative and better suited to visual perception or to processing tasks in fields such as medical imaging, remote sensing, and biometrics.

The fusion procedure is as follows. Read two or more images and decompose them using a transform such as WT or CCT [3-8]. Apply the fusion rule to the decomposed coefficients of each sub-band, selecting the highest coefficient. Apply the inverse transform to the fused transform coefficients to reconstruct the resultant fused image.

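Low pass sub band:

$$\text{CT image} = \frac{A_{\text{Low1}} + B_{\text{Low1}}}{2} \qquad (1)$$

$$\text{PET image} = \frac{A_{\text{Low2}} + B_{\text{Low2}}}{2} \qquad (2)$$

High pass sub band:

$$\text{Decision map} = \left( |A_{\text{High}}| \geq |B_{\text{High}}| \right)$$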

The CT source image and the registered PET image are each decomposed into a low-frequency component and a high-frequency component. The average fusion rule is applied to the low-frequency components, as given in Equations 1 and 2, and the highest-energy fusion rule is applied to the high-frequency components. The Inverse Complex Contourlet Transform (ICCT) is then applied to reconstruct the fused output.
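The two fusion rules can be sketched on plain coefficient arrays. This is a minimal illustration, not the authors' implementation: the function names `fuse_low` and `fuse_high` and the toy coefficient values are assumptions, and the arrays stand in for sub-bands that would come from a WT or CCT decomposition.

```python
import numpy as np

def fuse_low(a_low, b_low):
    """Average rule for low-frequency coefficients of two sources."""
    return (a_low + b_low) / 2.0

def fuse_high(a_high, b_high):
    """Max-magnitude rule: keep the coefficient with the larger absolute value."""
    decision_map = np.abs(a_high) >= np.abs(b_high)
    return np.where(decision_map, a_high, b_high)

# Toy sub-band coefficients (hypothetical values, for illustration only).
a_low = np.array([[10.0, 20.0], [30.0, 40.0]])
b_low = np.array([[20.0, 20.0], [10.0, 0.0]])
a_high = np.array([[1.0, -5.0], [0.5, 2.0]])
b_high = np.array([[-3.0, 4.0], [0.5, -1.0]])

fused_low = fuse_low(a_low, b_low)
fused_high = fuse_high(a_high, b_high)
print(fused_low)
print(fused_high)
```

Because `np.abs` returns the magnitude of complex numbers, the same decision map would also apply to complex-valued coefficients such as those produced by a dual-tree decomposition.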

Embedding and retrieving algorithm

• Choose the cover image and decompose it using the CCT block.

• Load the fused image to be hidden and decompose it using the CCT block.

• Calculate the pixel values of the defined sub-band coefficients for the cover image and the loaded fused image from the decomposed CCT blocks to obtain the embedded image.

• Apply the zigzag scan to place the low-frequency coefficients at the top and the high-frequency coefficients at the bottom for better compression, and estimate the Compression Ratio (CR) and Space Saving (SS).

• Apply the ICCT block to reconstruct the embedded image.
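The zigzag step in the list above can be sketched as follows. This assumes the common JPEG-style traversal along anti-diagonals, which the paper does not specify in detail; the helper names `zigzag_indices`, `zigzag_scan` and `inverse_zigzag` are illustrative.

```python
import numpy as np

def zigzag_indices(rows, cols):
    """Return (row, col) pairs in JPEG-style zigzag order."""
    return sorted(
        ((r, c) for r in range(rows) for c in range(cols)),
        # Sort by anti-diagonal; alternate the direction on odd/even diagonals.
        key=lambda rc: (rc[0] + rc[1], rc[0] if (rc[0] + rc[1]) % 2 else rc[1]),
    )

def zigzag_scan(block):
    """Flatten a 2-D block so that top-left (low-frequency) entries come first."""
    return np.array([block[r, c] for r, c in zigzag_indices(*block.shape)])

def inverse_zigzag(vector, shape):
    """Rebuild the 2-D block from its zigzag-ordered vector."""
    block = np.empty(shape, dtype=np.asarray(vector).dtype)
    for value, (r, c) in zip(vector, zigzag_indices(*shape)):
        block[r, c] = value
    return block

block = np.arange(9).reshape(3, 3)
scanned = zigzag_scan(block)
print(scanned)  # [0 1 3 6 4 2 5 7 8]
restored = inverse_zigzag(scanned, block.shape)
```

Ordering the coefficients this way tends to group the many small high-frequency values at the end of the vector, which is what makes a subsequent run-length or entropy coder effective.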

Image compression

Image compression reduces the amount of data required to represent an image. In this work, a zigzag scan [9,10] followed by RLE or Huffman coding is applied to Wavelet Transform (WT) and Complex Contourlet Transform (CCT) coefficients to reduce storage space and to make image transfer over the network more efficient.

The wavelet transform (WT) [11-13] is one of the methods used to represent medical images. It allows multi-resolution analysis of an image, and its main aim is to gather the relevant information from the image. It is well suited to representing non-stationary image signals and adapts well to human visual characteristics. Because they are built from a 1D basis, wavelets in higher dimensions capture only limited directional information: they provide only three basic directional components, namely horizontal, vertical and diagonal. It uses a Directional Filter Bank (DFB) which involves non-separable filtering and sampling. Hence, the major drawbacks of the wavelet transform are its limited set of directions and shapes and its high computational complexity.

The Complex Contourlet Transform (CCT) is very successful in detecting image activity along curves while examining images at multiple scales, locations, and orientations. It exhibits the following important characteristics: multi-resolution, localization, translation invariance, directionality, and anisotropy [1,14].

A comparative study of WT and CCT is performed in terms of Peak Signal to Noise Ratio (PSNR), Compression Ratio (CR), and Space Saving (SS). After compression, the Inverse Complex Contourlet Transform (ICCT) is applied to reconstruct the original image without any loss of information.
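The two entropy coders named above can be illustrated with a short sketch. It is a generic textbook version under stated assumptions, not the coder used in the experiments: the function names, the `[value, run_length]` pair format and the sample sequence are all hypothetical.

```python
import heapq
from collections import Counter

def rle_encode(values):
    """Run-length encode a sequence into [value, run_length] pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return runs

def rle_decode(runs):
    """Expand [value, run_length] pairs back into the original sequence."""
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out

def huffman_code(values):
    """Build a prefix code (symbol -> bit string) from symbol frequencies."""
    freq = Counter(values)
    if len(freq) == 1:
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: code so far}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, c1 = heapq.heappop(heap)
        w2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c1.items()}
        merged.update({s: "1" + c for s, c in c2.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

# Hypothetical zigzag-scanned, quantized coefficients.
scanned = [5, 5, 5, 0, 0, 0, 0, 0, 0, 2]
runs = rle_encode(scanned)
codes = huffman_code(scanned)
bitstream = "".join(codes[v] for v in scanned)
print(runs, codes, len(bitstream))
```

Both coders are lossless: `rle_decode(runs)` returns the input sequence, and the Huffman code is prefix-free, so the bit stream can be decoded unambiguously.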

Results and Discussion

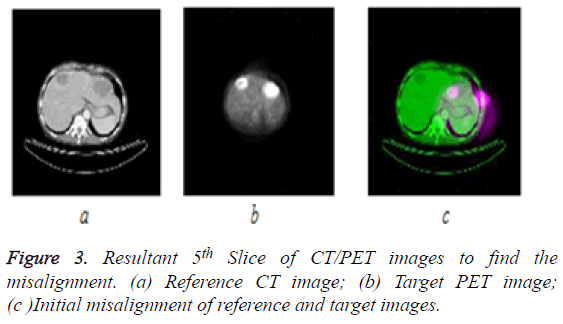

In this section, the experimental evaluation is carried out on CT/PET images using WT and CCT. CT and PET images are chosen for the registration, fusion, embedding, compression and reconstruction techniques. There are 5 slices of CT/PET multi-modal images in total; the 5th slice (CT and PET) is taken for discussion and is shown in Figure 3.

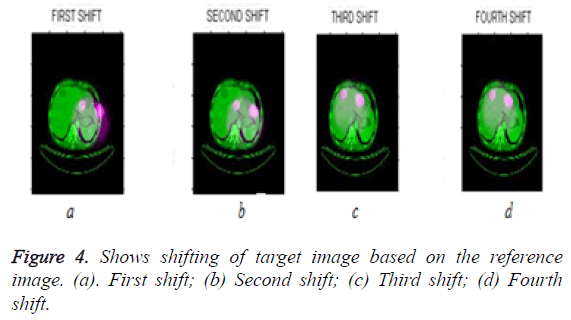

The misalignment or difference between the two images (reference CT and target PET) is measured, and the target image is then transformed or shifted based on the reference image. The registration process for the 5th slice (CT and PET) is shown in Figure 4.
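A shift-based registration of this kind can be sketched as an exhaustive search over integer translations. This is only an illustration under simplifying assumptions: the function `register_by_shift` is hypothetical, shifts are circular (`np.roll`), and mean squared error is used as the misalignment measure, whereas a real CT/PET pair would usually need a multi-modal similarity measure such as mutual information.

```python
import numpy as np

def register_by_shift(reference, target, max_shift=4):
    """Find the integer (dy, dx) shift of `target` that best matches `reference`."""
    reference = reference.astype(np.float64)
    best_shift, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(target, (dy, dx), axis=(0, 1))
            err = np.mean((reference - shifted) ** 2)  # misalignment measure
            if err < best_err:
                best_err, best_shift = err, (dy, dx)
    return best_shift, np.roll(target, best_shift, axis=(0, 1))

# Synthetic example: the target is the reference displaced by a known shift.
rng = np.random.default_rng(0)
reference = rng.random((32, 32))
target = np.roll(reference, (3, -2), axis=(0, 1))

shift, registered = register_by_shift(reference, target)
print(shift)  # the shift that undoes the displacement
```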

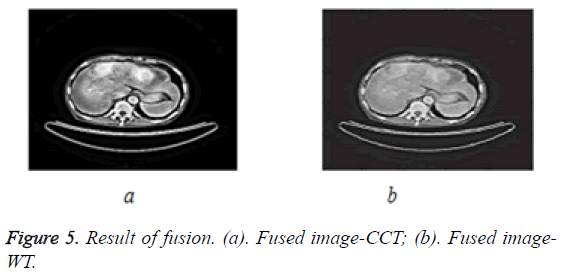

After the target (PET) image is aligned to the reference (CT) image, image fusion is performed on the reference image and the registered image (fourth shift). The image fusion is shown in Figure 5.

Image fusion is performed using the Wavelet Transform (WT) and the Complex Contourlet Transform (CCT), and then entropy and mutual information are calculated. The resultant multi-tumor region is located, and it is evaluated and validated by the radiologist. The results are shown in Table 1.

| Parameters | Wavelet Transform (WT) | Complex Contourlet Transform (CCT) |
|---|---|---|
| Entropy | 3.4162 | 3.6921 |
| Mutual information | 3.704 | 4.4029 |

Table 1: Entropy and mutual information values for input and output fused images using different transforms.

Entropy measures the amount of detail contained in an image, and mutual information [15] is the relative entropy between the input and output fused images. Comparing these values for the fused outputs shows that the image fused with CCT contains more information than the one fused with WT. The entropy and mutual information values of all 5 CT/PET slices are tabulated in Table 2 in order to select the slice used for compression.
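Both measures can be computed from gray-level histograms. The sketch below assumes 8-bit images, base-2 logarithms and 256 histogram bins; these choices, and the function names, are assumptions rather than details given in the paper.

```python
import numpy as np

def entropy(image, bins=256):
    """Shannon entropy (bits) of the gray-level histogram of an image."""
    hist, _ = np.histogram(image, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))

def mutual_information(a, b, bins=256):
    """Mutual information (bits) between two images of equal size."""
    joint, _, _ = np.histogram2d(
        a.ravel(), b.ravel(), bins=bins, range=[[0, 256], [0, 256]]
    )
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of a
    py = pxy.sum(axis=0, keepdims=True)   # marginal of b
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))

# Toy image with four equally likely gray levels (entropy of 2 bits).
img = np.tile(np.array([0, 64, 128, 192], dtype=np.uint8), (4, 1))
flat = np.zeros_like(img)

print(entropy(img))
print(mutual_information(img, img))   # equals the entropy of img
print(mutual_information(img, flat))  # a constant image shares no information
```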

| Slice | Entropy value (WT) | Entropy value (CCT) | Mutual information (WT) | Mutual information (CCT) |
|---|---|---|---|---|
| 1 | 3.2267 | 3.50661 | 3.3976 | 4.0411 |
| 2 | 3.2505 | 3.51584 | 3.3977 | 4.114 |
| 3 | 3.3529 | 3.5856 | 3.671 | 4.304 |
| 4 | 3.3614 | 3.66233 | 3.7324 | 4.3212 |
| 5 | 3.4162 | 3.6921 | 3.704 | 4.4029 |

Table 2: Entropy and mutual information values for all slices.

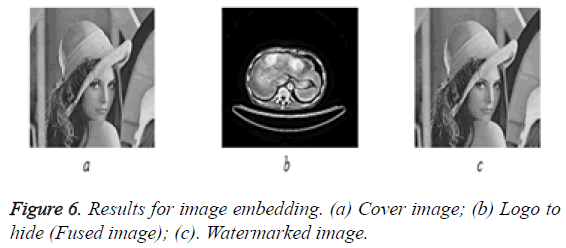

Slice selection is based on the highest entropy value and the highest mutual information value. In Table 2, the 5th slice of the CT/PET images has the higher entropy and mutual information values, so it is concluded that the 5th slice contains more information than the other slices. After slice selection, image embedding, compression and reconstruction are performed using WT and CCT. The experimental results for the embedded image are shown in Figure 6. The results for the RLE and Huffman compression methods are given in Tables 3 and 4.
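The selection rule can be checked directly against the Table 2 values. Using the CCT columns as the selection criterion is an assumption made for this sketch; the variable names are illustrative.

```python
# Values copied from Table 2 (CCT columns); keys are slice numbers.
cct_entropy = {1: 3.50661, 2: 3.51584, 3: 3.5856, 4: 3.66233, 5: 3.6921}
cct_mutual_info = {1: 4.0411, 2: 4.114, 3: 4.304, 4: 4.3212, 5: 4.4029}

# Pick the slice with the highest value of each measure.
best_by_entropy = max(cct_entropy, key=cct_entropy.get)
best_by_mutual_info = max(cct_mutual_info, key=cct_mutual_info.get)

print(best_by_entropy, best_by_mutual_info)  # 5 5
```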

| Compression technique (entropy encoding) | Dimension | Uncompressed size | Compressed size | MSE | PSNR | Compression ratio | Space saving |
|---|---|---|---|---|---|---|---|
| RLE | 256 × 256 | 65536 | 846 | 1.9435e+03 | 5.2789 | 77.4657:1 | 98.70% |
| Huffman | 256 × 256 | 65536 | 423 | 4.5346e+03 | 11.5994 | 154.9314:1 | 99.35% |

Table 3: Image compression using wavelet transforms.

| Compression technique (entropy encoding) | Dimension | Uncompressed size | Compressed size | MSE | PSNR | Compression ratio | Space saving |
|---|---|---|---|---|---|---|---|
| RLE | 256 × 256 | 65536 | 802 | 1.8168e+04 | 5.5717 | 81.7157:1 | 98.77% |
| Huffman | 256 × 256 | 65536 | 401 | 4.2367e+03 | 11.8495 | 163.4314:1 | 99.38% |

Table 4: Image compression using Complex Contourlet Transform (CCT).

The compression methods are compared on the basis of Mean Square Error (MSE), Peak Signal to Noise Ratio (PSNR), Compression Ratio (CR) and Space Saving (SS). From Tables 3 and 4, it is concluded that the Complex Contourlet Transform (CCT) with Huffman coding gives improved CR and SS compared with the RLE compression method.
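The CR and SS columns of Tables 3 and 4 follow from the tabulated sizes if CR is taken as uncompressed size divided by compressed size and SS as one minus the inverse of that ratio. These definitions are the usual ones and are assumed here, since the paper does not state them explicitly; the function names are illustrative.

```python
def compression_ratio(uncompressed_size, compressed_size):
    """Ratio of original to compressed size (reported as 'CR:1')."""
    return uncompressed_size / compressed_size

def space_saving(uncompressed_size, compressed_size):
    """Fraction of storage saved by compression."""
    return 1.0 - compressed_size / uncompressed_size

UNCOMPRESSED = 65536  # 256 x 256 image, as in Tables 3 and 4

# Compressed sizes copied from Tables 3 and 4.
compressed_sizes = {
    "WT-RLE": 846,
    "WT-Huffman": 423,
    "CCT-RLE": 802,
    "CCT-Huffman": 401,
}

for name, size in compressed_sizes.items():
    cr = compression_ratio(UNCOMPRESSED, size)
    ss = space_saving(UNCOMPRESSED, size)
    print(f"{name}: CR = {cr:.4f}:1, SS = {100 * ss:.2f}%")
```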

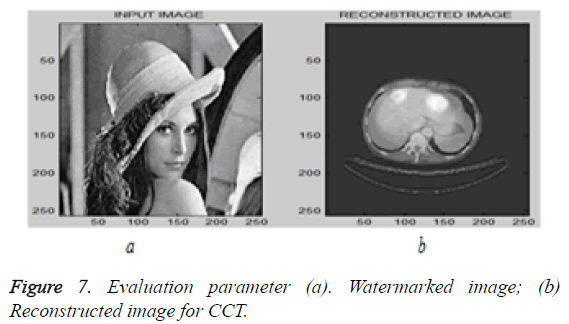

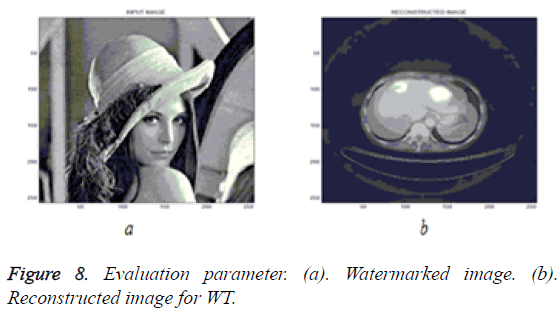

After compression, the Inverse Complex Contourlet Transform (ICCT) is applied to reconstruct the image, and the results are shown in Figures 7 and 8. The resulting evaluation parameters are shown in Table 5.

| Transform | MSE (I/P and fused) | PSNR (I/P and fused) | MSE (I/P and reconstructed) | PSNR (I/P and reconstructed) |
|---|---|---|---|---|
| WT | 4.2398e+03 | 11.8914 | 1.7613e+04 | 5.7065 |
| CCT | 112.3007 | 27.661 | 2.631e-27 | 30.3943 |

Table 5: Parameter evaluation for reconstruction image.
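For reference, MSE and PSNR between two images are commonly computed as in the sketch below. It assumes 8-bit images with a peak value of 255 and PSNR expressed in decibels; the paper does not state the exact convention used for Table 5, so the sketch is illustrative and is not claimed to reproduce the tabulated values.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two images of equal shape."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.mean((a - b) ** 2))

def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(a, b)
    if err == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))

# Toy example: a flat image and a copy offset by 5 gray levels.
original = np.full((4, 4), 100, dtype=np.uint8)
distorted = np.full((4, 4), 105, dtype=np.uint8)

print(mse(original, distorted))   # 25.0
print(psnr(original, distorted))
```

Converting to floating point before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise introduce.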

Conclusion

In this paper, a novel fusion-based image embedding and reconstruction method is evaluated on CT/PET images. From the results, it is concluded that Complex Contourlet Transform (CCT) based embedding is better than WT based embedding, and that CCT with Huffman coding gives better image compression than WT based compression. WT does not perform well when the edges are smooth curves, whereas CCT is very successful in detecting image activity along curves while analysing the image at multiple scales, locations, and orientations. It is also concluded that, of the five slices, the 5th slice of the CT/PET images shows the best entropy and mutual information, and the multi-tumor region detected in the image is evaluated and validated by the radiologist.

Acknowledgment

Our sincere thanks to Dr. Chezhian J. M.D (RD)., DCH., Senior Assistant Professor, Barnard Institute of Radiology, Madras Medical College and Rajiv Gandhi Government General Hospital, Chennai.

References

- Li M, Wang T. The non-sub sampled complex contourlet transform for image denoising. IEEE Comp Eng Technol Conf Chengdu 2010; 267-270.

- Do MN, Vetterli M. The contourlet transform: an efficient directional multi resolution image representation. IEEE Trans Image Proc 2005; 14: 2091-2096.

- Saranya G, Nirmala Devi S. An efficient approach for Image fusion technique using different transform methods. Int J Imag Robot 2015; 15: 70-80.

- Praveen V, Manoj K, Maheedhar D. Multi focus image fusion using wavelet transform. Int J Electr Comp Sci Eng 2012; 1: 2112-2118.

- Indira KP, Rani Hemamalini R. Impact of co-efficient selection rules on the performance of DWT based fused on medical image. Int Conf Robot Autom Contr Emb Sys 2015; 1-8.

- Yong Y, Song T, Shuying H. Multi focus image fusion based on NSCT and focused area detection. IEEE Sens J 2015; 15: 2824-2838.

- VPS Naidu, Raol JR. Pixel-level image fusion using wavelet and principal component analysis-A comparative analysis. Defense Sci J 2008; 58: 338-352.

- Gurdeep S, Naveen G. Gray scale image fusion using modified contourlet transform. Int J Adv Res Comp Sci Softw Eng 2013; 3: 806-810.

- Sadhana S, Manvi M, Prabhakar G. Image compression on biomedical images using predictive coding with the help of ROI. Int Conf Sign Proc Integ Netw Ind 2015; 120-125.

- Arvind K, Ashutosh S. Comparative analysis of wavelet transforms algorithms for image compression. Int Conf Commun Sig Proc India 2014; 414-418.

- Jingjing X, Haoxing Y, Wusheng L. Compressed sensing on wavelet-based contourlet transform. Int Conf Inform Technol Comp Eng Manag Sci 2011; 75-78.

- Prabhakar T, Jagan NV, Lakshmi AG. Image compression using DCT and wavelet transformations. Int J Sig Proc Imag Compres Pat Recogn 2011; 4: 61-74.

- Sonja G, Mislav G, Branka Z. Performance analysis of image compression using wavelets. IEEE Trans Industr Electr 2001; 48: 682-695.

- Sandeep K, Nitin G, Vedpal S. Fast and efficient medical image compression using contourlet transform. J Comp Sci 2013; 1: 7-13.

- Shruti G, Karthik RP, Erik BP. Mutual information metric evaluation for PET/MRI Image fusion. Aerosp Electr Conf NAECON IEEE Nat Dayton OH 2008; 305-311.